mirror of

https://github.com/blakeblackshear/frigate.git

synced 2026-02-05 10:45:21 +03:00

Merge remote-tracking branch 'upstream/dev' into 230523-optimize-sync-records

commit

1ec8bc0c46

@ -52,7 +52,9 @@

|

|||||||

"mikestead.dotenv",

|

"mikestead.dotenv",

|

||||||

"csstools.postcss",

|

"csstools.postcss",

|

||||||

"blanu.vscode-styled-jsx",

|

"blanu.vscode-styled-jsx",

|

||||||

"bradlc.vscode-tailwindcss"

|

"bradlc.vscode-tailwindcss",

|

||||||

|

"ms-python.isort",

|

||||||

|

"charliermarsh.ruff"

|

||||||

],

|

],

|

||||||

"settings": {

|

"settings": {

|

||||||

"remote.autoForwardPorts": false,

|

"remote.autoForwardPorts": false,

|

||||||

@ -68,6 +70,7 @@

|

|||||||

"python.testing.unittestArgs": ["-v", "-s", "./frigate/test"],

|

"python.testing.unittestArgs": ["-v", "-s", "./frigate/test"],

|

||||||

"files.trimTrailingWhitespace": true,

|

"files.trimTrailingWhitespace": true,

|

||||||

"eslint.workingDirectories": ["./web"],

|

"eslint.workingDirectories": ["./web"],

|

||||||

|

"isort.args": ["--settings-path=./pyproject.toml"],

|

||||||

"[python]": {

|

"[python]": {

|

||||||

"editor.defaultFormatter": "ms-python.black-formatter",

|

"editor.defaultFormatter": "ms-python.black-formatter",

|

||||||

"editor.formatOnSave": true

|

"editor.formatOnSave": true

|

||||||

|

|||||||

@ -2,6 +2,12 @@

|

|||||||

|

|

||||||

set -euxo pipefail

|

set -euxo pipefail

|

||||||

|

|

||||||

|

# Cleanup the old github host key

|

||||||

|

sed -i -e '/AAAAB3NzaC1yc2EAAAABIwAAAQEAq2A7hRGmdnm9tUDbO9IDSwBK6TbQa+PXYPCPy6rbTrTtw7PHkccKrpp0yVhp5HdEIcKr6pLlVDBfOLX9QUsyCOV0wzfjIJNlGEYsdlLJizHhbn2mUjvSAHQqZETYP81eFzLQNnPHt4EVVUh7VfDESU84KezmD5QlWpXLmvU31\/yMf+Se8xhHTvKSCZIFImWwoG6mbUoWf9nzpIoaSjB+weqqUUmpaaasXVal72J+UX2B+2RPW3RcT0eOzQgqlJL3RKrTJvdsjE3JEAvGq3lGHSZXy28G3skua2SmVi\/w4yCE6gbODqnTWlg7+wC604ydGXA8VJiS5ap43JXiUFFAaQ==/d' ~/.ssh/known_hosts

|

||||||

|

# Add new github host key

|

||||||

|

curl -L https://api.github.com/meta | jq -r '.ssh_keys | .[]' | \

|

||||||

|

sed -e 's/^/github.com /' >> ~/.ssh/known_hosts

|

||||||

|

|

||||||

# Frigate normal container runs as root, so it have permission to create

|

# Frigate normal container runs as root, so it have permission to create

|

||||||

# the folders. But the devcontainer runs as the host user, so we need to

|

# the folders. But the devcontainer runs as the host user, so we need to

|

||||||

# create the folders and give the host user permission to write to them.

|

# create the folders and give the host user permission to write to them.

|

||||||

|

|||||||

35

.github/workflows/pull_request.yml

vendored

@ -65,24 +65,26 @@ jobs:

|

|||||||

- name: Check out the repository

|

- name: Check out the repository

|

||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

- name: Set up Python ${{ env.DEFAULT_PYTHON }}

|

- name: Set up Python ${{ env.DEFAULT_PYTHON }}

|

||||||

uses: actions/setup-python@v4.6.0

|

uses: actions/setup-python@v4.6.1

|

||||||

with:

|

with:

|

||||||

python-version: ${{ env.DEFAULT_PYTHON }}

|

python-version: ${{ env.DEFAULT_PYTHON }}

|

||||||

- name: Install requirements

|

- name: Install requirements

|

||||||

run: |

|

run: |

|

||||||

pip install pip

|

python3 -m pip install -U pip

|

||||||

pip install -r requirements-dev.txt

|

python3 -m pip install -r requirements-dev.txt

|

||||||

- name: Lint

|

- name: Check black

|

||||||

run: |

|

run: |

|

||||||

python3 -m black frigate --check

|

black --check --diff frigate migrations docker *.py

|

||||||

|

- name: Check isort

|

||||||

|

run: |

|

||||||

|

isort --check --diff frigate migrations docker *.py

|

||||||

|

- name: Check ruff

|

||||||

|

run: |

|

||||||

|

ruff check frigate migrations docker *.py

|

||||||

|

|

||||||

python_tests:

|

python_tests:

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

name: Python Tests

|

name: Python Tests

|

||||||

strategy:

|

|

||||||

fail-fast: false

|

|

||||||

matrix:

|

|

||||||

platform: [amd64,arm64]

|

|

||||||

steps:

|

steps:

|

||||||

- name: Check out code

|

- name: Check out code

|

||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

@ -94,22 +96,13 @@ jobs:

|

|||||||

- name: Build web

|

- name: Build web

|

||||||

run: npm run build

|

run: npm run build

|

||||||

working-directory: ./web

|

working-directory: ./web

|

||||||

- run: make version

|

|

||||||

- name: Set up QEMU

|

- name: Set up QEMU

|

||||||

uses: docker/setup-qemu-action@v2

|

uses: docker/setup-qemu-action@v2

|

||||||

- name: Set up Docker Buildx

|

- name: Set up Docker Buildx

|

||||||

uses: docker/setup-buildx-action@v2

|

uses: docker/setup-buildx-action@v2

|

||||||

- name: Build

|

- name: Build

|

||||||

uses: docker/build-push-action@v4

|

run: make

|

||||||

with:

|

|

||||||

context: .

|

|

||||||

push: false

|

|

||||||

load: true

|

|

||||||

platforms: linux/${{ matrix.platform }}

|

|

||||||

tags: |

|

|

||||||

frigate:${{ matrix.platform }}

|

|

||||||

target: frigate

|

|

||||||

- name: Run mypy

|

- name: Run mypy

|

||||||

run: docker run --platform linux/${{ matrix.platform }} --rm --entrypoint=python3 frigate:${{ matrix.platform }} -u -m mypy --config-file frigate/mypy.ini frigate

|

run: docker run --rm --entrypoint=python3 frigate:latest -u -m mypy --config-file frigate/mypy.ini frigate

|

||||||

- name: Run tests

|

- name: Run tests

|

||||||

run: docker run --platform linux/${{ matrix.platform }} --rm --entrypoint=python3 frigate:${{ matrix.platform }} -u -m unittest

|

run: docker run --rm --entrypoint=python3 frigate:latest -u -m unittest

|

||||||

|

|||||||

@ -227,8 +227,8 @@ CMD ["sleep", "infinity"]

|

|||||||

|

|

||||||

|

|

||||||

# Frigate web build

|

# Frigate web build

|

||||||

# force this to run on amd64 because QEMU is painfully slow

|

# This should be architecture agnostic, so speed up the build on multiarch by not using QEMU.

|

||||||

FROM --platform=linux/amd64 node:16 AS web-build

|

FROM --platform=$BUILDPLATFORM node:16 AS web-build

|

||||||

|

|

||||||

WORKDIR /work

|

WORKDIR /work

|

||||||

COPY web/package.json web/package-lock.json ./

|

COPY web/package.json web/package-lock.json ./

|

||||||

|

|||||||

12

benchmark.py

@ -1,11 +1,11 @@

|

|||||||

import os

|

|

||||||

from statistics import mean

|

|

||||||

import multiprocessing as mp

|

|

||||||

import numpy as np

|

|

||||||

import datetime

|

import datetime

|

||||||

|

import multiprocessing as mp

|

||||||

|

from statistics import mean

|

||||||

|

|

||||||

|

import numpy as np

|

||||||

|

|

||||||

from frigate.config import DetectorTypeEnum

|

from frigate.config import DetectorTypeEnum

|

||||||

from frigate.object_detection import (

|

from frigate.object_detection import (

|

||||||

LocalObjectDetector,

|

|

||||||

ObjectDetectProcess,

|

ObjectDetectProcess,

|

||||||

RemoteObjectDetector,

|

RemoteObjectDetector,

|

||||||

load_labels,

|

load_labels,

|

||||||

@ -53,7 +53,7 @@ def start(id, num_detections, detection_queue, event):

|

|||||||

frame_times = []

|

frame_times = []

|

||||||

for x in range(0, num_detections):

|

for x in range(0, num_detections):

|

||||||

start_frame = datetime.datetime.now().timestamp()

|

start_frame = datetime.datetime.now().timestamp()

|

||||||

detections = object_detector.detect(my_frame)

|

object_detector.detect(my_frame)

|

||||||

frame_times.append(datetime.datetime.now().timestamp() - start_frame)

|

frame_times.append(datetime.datetime.now().timestamp() - start_frame)

|

||||||

|

|

||||||

duration = datetime.datetime.now().timestamp() - start

|

duration = datetime.datetime.now().timestamp() - start

|

||||||

|

|||||||

@ -3,11 +3,14 @@

|

|||||||

import json

|

import json

|

||||||

import os

|

import os

|

||||||

import sys

|

import sys

|

||||||

|

|

||||||

import yaml

|

import yaml

|

||||||

|

|

||||||

sys.path.insert(0, "/opt/frigate")

|

sys.path.insert(0, "/opt/frigate")

|

||||||

from frigate.const import BIRDSEYE_PIPE, BTBN_PATH

|

from frigate.const import BIRDSEYE_PIPE, BTBN_PATH # noqa: E402

|

||||||

from frigate.ffmpeg_presets import parse_preset_hardware_acceleration_encode

|

from frigate.ffmpeg_presets import ( # noqa: E402

|

||||||

|

parse_preset_hardware_acceleration_encode,

|

||||||

|

)

|

||||||

|

|

||||||

sys.path.remove("/opt/frigate")

|

sys.path.remove("/opt/frigate")

|

||||||

|

|

||||||

|

|||||||

@ -172,6 +172,27 @@ http {

|

|||||||

root /media/frigate;

|

root /media/frigate;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

location /exports/ {

|

||||||

|

add_header 'Access-Control-Allow-Origin' "$http_origin" always;

|

||||||

|

add_header 'Access-Control-Allow-Credentials' 'true';

|

||||||

|

add_header 'Access-Control-Expose-Headers' 'Content-Length';

|

||||||

|

if ($request_method = 'OPTIONS') {

|

||||||

|

add_header 'Access-Control-Allow-Origin' "$http_origin";

|

||||||

|

add_header 'Access-Control-Max-Age' 1728000;

|

||||||

|

add_header 'Content-Type' 'text/plain charset=UTF-8';

|

||||||

|

add_header 'Content-Length' 0;

|

||||||

|

return 204;

|

||||||

|

}

|

||||||

|

|

||||||

|

types {

|

||||||

|

video/mp4 mp4;

|

||||||

|

}

|

||||||

|

|

||||||

|

autoindex on;

|

||||||

|

autoindex_format json;

|

||||||

|

root /media/frigate;

|

||||||

|

}

|

||||||

|

|

||||||

location /ws {

|

location /ws {

|

||||||

proxy_pass http://mqtt_ws/;

|

proxy_pass http://mqtt_ws/;

|

||||||

proxy_http_version 1.1;

|

proxy_http_version 1.1;

|

||||||

|

|||||||
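Reviewer note: the new `location /exports/` block in the hunk above turns on `autoindex` with `autoindex_format json`, so a request for the directory should return a JSON array of entries with `name`, `type`, `mtime` and, for files, `size`. The following is a minimal sketch of how a client might pick the exported mp4 files out of such a listing. The sample listing and its file names are invented for illustration, and `list_exported_clips` is a hypothetical helper, not part of Frigate.

```python
import json

# Invented sample of what nginx returns for a directory when
# `autoindex_format json` is enabled (file names are made up).
SAMPLE_LISTING = """
[
  {"name": "example_clip.mp4", "type": "file", "mtime": "Tue, 23 May 2023 10:00:00 GMT", "size": 1048576},
  {"name": "notes.txt", "type": "file", "mtime": "Tue, 23 May 2023 10:05:00 GMT", "size": 12},
  {"name": "older", "type": "directory", "mtime": "Tue, 23 May 2023 09:00:00 GMT"}
]
"""


def list_exported_clips(listing_json: str) -> list[str]:
    """Return the names of regular files ending in .mp4 from an autoindex listing."""
    entries = json.loads(listing_json)
    return [
        entry["name"]
        for entry in entries
        if entry.get("type") == "file" and entry["name"].endswith(".mp4")
    ]


if __name__ == "__main__":
    print(list_exported_clips(SAMPLE_LISTING))
```

Filtering on `type` as well as the extension keeps subdirectories out of the result even if one happens to be named like a clip.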

@ -2,4 +2,4 @@

|

|||||||

|

|

||||||

This website is built using [Docusaurus 2](https://v2.docusaurus.io/), a modern static website generator.

|

This website is built using [Docusaurus 2](https://v2.docusaurus.io/), a modern static website generator.

|

||||||

|

|

||||||

For installation and contributing instructions, please follow the [Contributing Docs](https://blakeblackshear.github.io/frigate/contributing).

|

For installation and contributing instructions, please follow the [Contributing Docs](https://docs.frigate.video/development/contributing).

|

||||||

|

|||||||

@ -80,3 +80,7 @@ record:

|

|||||||

dog: 2

|

dog: 2

|

||||||

car: 7

|

car: 7

|

||||||

```

|

```

|

||||||

|

|

||||||

|

## How do I export recordings?

|

||||||

|

|

||||||

|

The export page in the Frigate WebUI allows for exporting real time clips with a designated start and stop time as well as exporting a timelapse for a designated start and stop time. These exports can take a while so it is important to leave the file until it is no longer in progress.

|

||||||

|

|||||||

@ -24,6 +24,7 @@ Frigate uses the following locations for read/write operations in the container.

|

|||||||

- `/config`: Used to store the Frigate config file and sqlite database. You will also see a few files alongside the database file while Frigate is running.

|

- `/config`: Used to store the Frigate config file and sqlite database. You will also see a few files alongside the database file while Frigate is running.

|

||||||

- `/media/frigate/clips`: Used for snapshot storage. In the future, it will likely be renamed from `clips` to `snapshots`. The file structure here cannot be modified and isn't intended to be browsed or managed manually.

|

- `/media/frigate/clips`: Used for snapshot storage. In the future, it will likely be renamed from `clips` to `snapshots`. The file structure here cannot be modified and isn't intended to be browsed or managed manually.

|

||||||

- `/media/frigate/recordings`: Internal system storage for recording segments. The file structure here cannot be modified and isn't intended to be browsed or managed manually.

|

- `/media/frigate/recordings`: Internal system storage for recording segments. The file structure here cannot be modified and isn't intended to be browsed or managed manually.

|

||||||

|

- `/media/frigate/exports`: Storage for clips and timelapses that have been exported via the WebUI or API.

|

||||||

- `/tmp/cache`: Cache location for recording segments. Initial recordings are written here before being checked and converted to mp4 and moved to the recordings folder.

|

- `/tmp/cache`: Cache location for recording segments. Initial recordings are written here before being checked and converted to mp4 and moved to the recordings folder.

|

||||||

- `/dev/shm`: It is not recommended to modify this directory or map it with docker. This is the location for raw decoded frames in shared memory and it's size is impacted by the `shm-size` calculations below.

|

- `/dev/shm`: It is not recommended to modify this directory or map it with docker. This is the location for raw decoded frames in shared memory and it's size is impacted by the `shm-size` calculations below.

|

||||||

|

|

||||||

@ -221,7 +222,7 @@ These settings were tested on DSM 7.1.1-42962 Update 4

|

|||||||

|

|

||||||

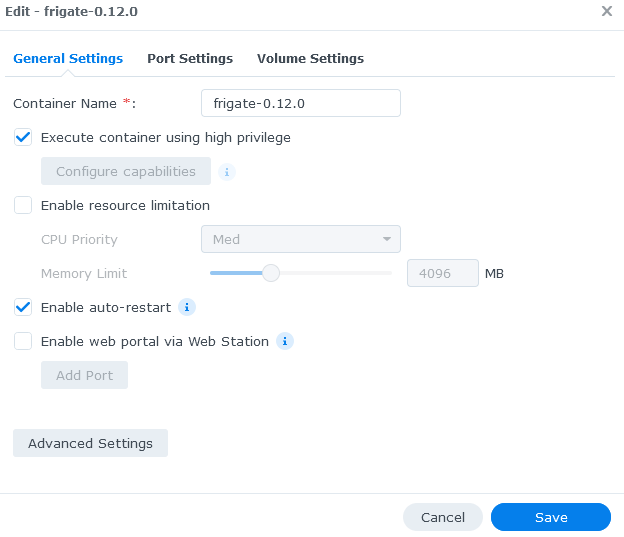

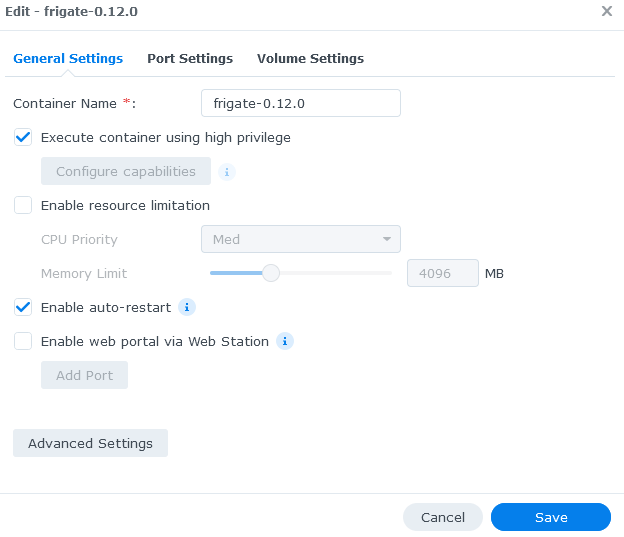

The `Execute container using high privilege` option needs to be enabled in order to give the frigate container the elevated privileges it may need.

|

The `Execute container using high privilege` option needs to be enabled in order to give the frigate container the elevated privileges it may need.

|

||||||

|

|

||||||

The `Enable auto-restart` option can be enabled if you want the container to automatically restart whenever it improperly shuts down due to an error.

|

The `Enable auto-restart` option can be enabled if you want the container to automatically restart whenever it improperly shuts down due to an error.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@ -271,6 +271,20 @@ HTTP Live Streaming Video on Demand URL for the specified event. Can be viewed i

|

|||||||

|

|

||||||

HTTP Live Streaming Video on Demand URL for the camera with the specified time range. Can be viewed in an application like VLC.

|

HTTP Live Streaming Video on Demand URL for the camera with the specified time range. Can be viewed in an application like VLC.

|

||||||

|

|

||||||

|

### `POST /api/export/<camera>/start/<start-timestamp>/end/<end-timestamp>`

|

||||||

|

|

||||||

|

Export recordings from `start-timestamp` to `end-timestamp` for `camera` as a single mp4 file. These recordings will be exported to the `/media/frigate/exports` folder.

|

||||||

|

|

||||||

|

It is also possible to export this recording as a timelapse.

|

||||||

|

|

||||||

|

**Optional Body:**

|

||||||

|

|

||||||

|

```json

|

||||||

|

{

|

||||||

|

"playback": "realtime", // playback factor: realtime or timelapse_25x

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

### `GET /api/<camera_name>/recordings/summary`

|

### `GET /api/<camera_name>/recordings/summary`

|

||||||

|

|

||||||

Hourly summary of recordings data for a camera.

|

Hourly summary of recordings data for a camera.

|

||||||

|

|||||||
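Reviewer note: the `POST /api/export/<camera>/start/<start-timestamp>/end/<end-timestamp>` endpoint documented in the hunk above takes the camera and time range in the path and an optional JSON body with a `playback` value. The sketch below only builds such a request with the standard library and does not send it. The host `http://frigate.local:5000`, the camera name `front_door`, and the helper `build_export_request` are assumptions for illustration; the two accepted `playback` values are taken from the documentation text in this diff.

```python
import json
from urllib.parse import quote
from urllib.request import Request

# Playback values named in the API documentation added by this commit.
ALLOWED_PLAYBACK = ("realtime", "timelapse_25x")


def build_export_request(base_url, camera, start_ts, end_ts, playback="realtime"):
    """Build (but do not send) a POST request for the export endpoint.

    base_url and camera are caller-supplied; this helper is hypothetical.
    """
    if end_ts <= start_ts:
        raise ValueError("end timestamp must be after start timestamp")
    if playback not in ALLOWED_PLAYBACK:
        raise ValueError(f"playback must be one of {ALLOWED_PLAYBACK}")

    url = (
        f"{base_url.rstrip('/')}/api/export/{quote(camera, safe='')}"
        f"/start/{start_ts}/end/{end_ts}"
    )
    body = json.dumps({"playback": playback}).encode("utf-8")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Assumed host and camera name, for illustration only.
    req = build_export_request(
        "http://frigate.local:5000", "front_door", 1685000000, 1685003600
    )
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would issue the call. According to the documentation above, the resulting file is written to `/media/frigate/exports`.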

1111

docs/package-lock.json

generated

File diff suppressed because it is too large

@ -14,8 +14,8 @@

|

|||||||

"write-heading-ids": "docusaurus write-heading-ids"

|

"write-heading-ids": "docusaurus write-heading-ids"

|

||||||

},

|

},

|

||||||

"dependencies": {

|

"dependencies": {

|

||||||

"@docusaurus/core": "^2.4.0",

|

"@docusaurus/core": "^2.4.1",

|

||||||

"@docusaurus/preset-classic": "^2.4.0",

|

"@docusaurus/preset-classic": "^2.4.1",

|

||||||

"@mdx-js/react": "^1.6.22",

|

"@mdx-js/react": "^1.6.22",

|

||||||

"clsx": "^1.2.1",

|

"clsx": "^1.2.1",

|

||||||

"prism-react-renderer": "^1.3.5",

|

"prism-react-renderer": "^1.3.5",

|

||||||

|

|||||||

@ -1,13 +1,14 @@

|

|||||||

import faulthandler

|

import faulthandler

|

||||||

from flask import cli

|

|

||||||

|

|

||||||

faulthandler.enable()

|

|

||||||

import threading

|

import threading

|

||||||

|

|

||||||

threading.current_thread().name = "frigate"

|

from flask import cli

|

||||||

|

|

||||||

from frigate.app import FrigateApp

|

from frigate.app import FrigateApp

|

||||||

|

|

||||||

|

faulthandler.enable()

|

||||||

|

|

||||||

|

threading.current_thread().name = "frigate"

|

||||||

|

|

||||||

cli.show_server_banner = lambda *x: None

|

cli.show_server_banner = lambda *x: None

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

|

|||||||

@ -1,16 +1,16 @@

|

|||||||

import logging

|

import logging

|

||||||

import multiprocessing as mp

|

import multiprocessing as mp

|

||||||

from multiprocessing.queues import Queue

|

|

||||||

from multiprocessing.synchronize import Event as MpEvent

|

|

||||||

import os

|

import os

|

||||||

import shutil

|

import shutil

|

||||||

import signal

|

import signal

|

||||||

import sys

|

import sys

|

||||||

from typing import Optional

|

|

||||||

from types import FrameType

|

|

||||||

import psutil

|

|

||||||

|

|

||||||

import traceback

|

import traceback

|

||||||

|

from multiprocessing.queues import Queue

|

||||||

|

from multiprocessing.synchronize import Event as MpEvent

|

||||||

|

from types import FrameType

|

||||||

|

from typing import Optional

|

||||||

|

|

||||||

|

import psutil

|

||||||

from peewee_migrate import Router

|

from peewee_migrate import Router

|

||||||

from playhouse.sqlite_ext import SqliteExtDatabase

|

from playhouse.sqlite_ext import SqliteExtDatabase

|

||||||

from playhouse.sqliteq import SqliteQueueDatabase

|

from playhouse.sqliteq import SqliteQueueDatabase

|

||||||

@ -24,16 +24,17 @@ from frigate.const import (

|

|||||||

CLIPS_DIR,

|

CLIPS_DIR,

|

||||||

CONFIG_DIR,

|

CONFIG_DIR,

|

||||||

DEFAULT_DB_PATH,

|

DEFAULT_DB_PATH,

|

||||||

|

EXPORT_DIR,

|

||||||

MODEL_CACHE_DIR,

|

MODEL_CACHE_DIR,

|

||||||

RECORD_DIR,

|

RECORD_DIR,

|

||||||

)

|

)

|

||||||

from frigate.object_detection import ObjectDetectProcess

|

|

||||||

from frigate.events.cleanup import EventCleanup

|

from frigate.events.cleanup import EventCleanup

|

||||||

from frigate.events.external import ExternalEventProcessor

|

from frigate.events.external import ExternalEventProcessor

|

||||||

from frigate.events.maintainer import EventProcessor

|

from frigate.events.maintainer import EventProcessor

|

||||||

from frigate.http import create_app

|

from frigate.http import create_app

|

||||||

from frigate.log import log_process, root_configurer

|

from frigate.log import log_process, root_configurer

|

||||||

from frigate.models import Event, Recordings, Timeline

|

from frigate.models import Event, Recordings, Timeline

|

||||||

|

from frigate.object_detection import ObjectDetectProcess

|

||||||

from frigate.object_processing import TrackedObjectProcessor

|

from frigate.object_processing import TrackedObjectProcessor

|

||||||

from frigate.output import output_frames

|

from frigate.output import output_frames

|

||||||

from frigate.plus import PlusApi

|

from frigate.plus import PlusApi

|

||||||

@ -42,10 +43,10 @@ from frigate.record.record import manage_recordings

|

|||||||

from frigate.stats import StatsEmitter, stats_init

|

from frigate.stats import StatsEmitter, stats_init

|

||||||

from frigate.storage import StorageMaintainer

|

from frigate.storage import StorageMaintainer

|

||||||

from frigate.timeline import TimelineProcessor

|

from frigate.timeline import TimelineProcessor

|

||||||

|

from frigate.types import CameraMetricsTypes, RecordMetricsTypes

|

||||||

from frigate.version import VERSION

|

from frigate.version import VERSION

|

||||||

from frigate.video import capture_camera, track_camera

|

from frigate.video import capture_camera, track_camera

|

||||||

from frigate.watchdog import FrigateWatchdog

|

from frigate.watchdog import FrigateWatchdog

|

||||||

from frigate.types import CameraMetricsTypes, RecordMetricsTypes

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

@ -68,7 +69,14 @@ class FrigateApp:

|

|||||||

os.environ[key] = value

|

os.environ[key] = value

|

||||||

|

|

||||||

def ensure_dirs(self) -> None:

|

def ensure_dirs(self) -> None:

|

||||||

for d in [CONFIG_DIR, RECORD_DIR, CLIPS_DIR, CACHE_DIR, MODEL_CACHE_DIR]:

|

for d in [

|

||||||

|

CONFIG_DIR,

|

||||||

|

RECORD_DIR,

|

||||||

|

CLIPS_DIR,

|

||||||

|

CACHE_DIR,

|

||||||

|

MODEL_CACHE_DIR,

|

||||||

|

EXPORT_DIR,

|

||||||

|

]:

|

||||||

if not os.path.exists(d) and not os.path.islink(d):

|

if not os.path.exists(d) and not os.path.islink(d):

|

||||||

logger.info(f"Creating directory: {d}")

|

logger.info(f"Creating directory: {d}")

|

||||||

os.makedirs(d)

|

os.makedirs(d)

|

||||||

@ -133,10 +141,10 @@ class FrigateApp:

|

|||||||

for log, level in self.config.logger.logs.items():

|

for log, level in self.config.logger.logs.items():

|

||||||

logging.getLogger(log).setLevel(level.value.upper())

|

logging.getLogger(log).setLevel(level.value.upper())

|

||||||

|

|

||||||

if not "werkzeug" in self.config.logger.logs:

|

if "werkzeug" not in self.config.logger.logs:

|

||||||

logging.getLogger("werkzeug").setLevel("ERROR")

|

logging.getLogger("werkzeug").setLevel("ERROR")

|

||||||

|

|

||||||

if not "ws4py" in self.config.logger.logs:

|

if "ws4py" not in self.config.logger.logs:

|

||||||

logging.getLogger("ws4py").setLevel("ERROR")

|

logging.getLogger("ws4py").setLevel("ERROR")

|

||||||

|

|

||||||

def init_queues(self) -> None:

|

def init_queues(self) -> None:

|

||||||

@ -294,7 +302,7 @@ class FrigateApp:

|

|||||||

def start_video_output_processor(self) -> None:

|

def start_video_output_processor(self) -> None:

|

||||||

output_processor = mp.Process(

|

output_processor = mp.Process(

|

||||||

target=output_frames,

|

target=output_frames,

|

||||||

name=f"output_processor",

|

name="output_processor",

|

||||||

args=(

|

args=(

|

||||||

self.config,

|

self.config,

|

||||||

self.video_output_queue,

|

self.video_output_queue,

|

||||||

@ -467,7 +475,7 @@ class FrigateApp:

|

|||||||

self.stop()

|

self.stop()

|

||||||

|

|

||||||

def stop(self) -> None:

|

def stop(self) -> None:

|

||||||

logger.info(f"Stopping...")

|

logger.info("Stopping...")

|

||||||

self.stop_event.set()

|

self.stop_event.set()

|

||||||

|

|

||||||

for detector in self.detectors.values():

|

for detector in self.detectors.values():

|

||||||

|

|||||||

@ -1,17 +1,14 @@

|

|||||||

"""Handle communication between Frigate and other applications."""

|

"""Handle communication between Frigate and other applications."""

|

||||||

|

|

||||||

import logging

|

import logging

|

||||||

|

from abc import ABC, abstractmethod

|

||||||

from typing import Any, Callable

|

from typing import Any, Callable

|

||||||

|

|

||||||

from abc import ABC, abstractmethod

|

|

||||||

|

|

||||||

from frigate.config import FrigateConfig

|

from frigate.config import FrigateConfig

|

||||||

from frigate.ptz import OnvifController, OnvifCommandEnum

|

from frigate.ptz import OnvifCommandEnum, OnvifController

|

||||||

from frigate.types import CameraMetricsTypes, RecordMetricsTypes

|

from frigate.types import CameraMetricsTypes, RecordMetricsTypes

|

||||||

from frigate.util import restart_frigate

|

from frigate.util import restart_frigate

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

@ -72,7 +69,7 @@ class Dispatcher:

|

|||||||

camera_name = topic.split("/")[-3]

|

camera_name = topic.split("/")[-3]

|

||||||

command = topic.split("/")[-2]

|

command = topic.split("/")[-2]

|

||||||

self._camera_settings_handlers[command](camera_name, payload)

|

self._camera_settings_handlers[command](camera_name, payload)

|

||||||

except IndexError as e:

|

except IndexError:

|

||||||

logger.error(f"Received invalid set command: {topic}")

|

logger.error(f"Received invalid set command: {topic}")

|

||||||

return

|

return

|

||||||

elif topic.endswith("ptz"):

|

elif topic.endswith("ptz"):

|

||||||

@ -80,7 +77,7 @@ class Dispatcher:

|

|||||||

# example /cam_name/ptz payload=MOVE_UP|MOVE_DOWN|STOP...

|

# example /cam_name/ptz payload=MOVE_UP|MOVE_DOWN|STOP...

|

||||||

camera_name = topic.split("/")[-2]

|

camera_name = topic.split("/")[-2]

|

||||||

self._on_ptz_command(camera_name, payload)

|

self._on_ptz_command(camera_name, payload)

|

||||||

except IndexError as e:

|

except IndexError:

|

||||||

logger.error(f"Received invalid ptz command: {topic}")

|

logger.error(f"Received invalid ptz command: {topic}")

|

||||||

return

|

return

|

||||||

elif topic == "restart":

|

elif topic == "restart":

|

||||||

@ -128,7 +125,7 @@ class Dispatcher:

|

|||||||

elif payload == "OFF":

|

elif payload == "OFF":

|

||||||

if self.camera_metrics[camera_name]["detection_enabled"].value:

|

if self.camera_metrics[camera_name]["detection_enabled"].value:

|

||||||

logger.error(

|

logger.error(

|

||||||

f"Turning off motion is not allowed when detection is enabled."

|

"Turning off motion is not allowed when detection is enabled."

|

||||||

)

|

)

|

||||||

return

|

return

|

||||||

|

|

||||||

@ -196,7 +193,7 @@ class Dispatcher:

|

|||||||

if payload == "ON":

|

if payload == "ON":

|

||||||

if not self.config.cameras[camera_name].record.enabled_in_config:

|

if not self.config.cameras[camera_name].record.enabled_in_config:

|

||||||

logger.error(

|

logger.error(

|

||||||

f"Recordings must be enabled in the config to be turned on via MQTT."

|

"Recordings must be enabled in the config to be turned on via MQTT."

|

||||||

)

|

)

|

||||||

return

|

return

|

||||||

|

|

||||||

|

|||||||

@ -1,6 +1,5 @@

|

|||||||

import logging

|

import logging

|

||||||

import threading

|

import threading

|

||||||

|

|

||||||

from typing import Any, Callable

|

from typing import Any, Callable

|

||||||

|

|

||||||

import paho.mqtt.client as mqtt

|

import paho.mqtt.client as mqtt

|

||||||

@ -8,7 +7,6 @@ import paho.mqtt.client as mqtt

|

|||||||

from frigate.comms.dispatcher import Communicator

|

from frigate.comms.dispatcher import Communicator

|

||||||

from frigate.config import FrigateConfig

|

from frigate.config import FrigateConfig

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

@ -177,10 +175,10 @@ class MqttClient(Communicator): # type: ignore[misc]

|

|||||||

f"{self.mqtt_config.topic_prefix}/restart", self.on_mqtt_command

|

f"{self.mqtt_config.topic_prefix}/restart", self.on_mqtt_command

|

||||||

)

|

)

|

||||||

|

|

||||||

if not self.mqtt_config.tls_ca_certs is None:

|

if self.mqtt_config.tls_ca_certs is not None:

|

||||||

if (

|

if (

|

||||||

not self.mqtt_config.tls_client_cert is None

|

self.mqtt_config.tls_client_cert is not None

|

||||||

and not self.mqtt_config.tls_client_key is None

|

and self.mqtt_config.tls_client_key is not None

|

||||||

):

|

):

|

||||||

self.client.tls_set(

|

self.client.tls_set(

|

||||||

self.mqtt_config.tls_ca_certs,

|

self.mqtt_config.tls_ca_certs,

|

||||||

@ -189,9 +187,9 @@ class MqttClient(Communicator): # type: ignore[misc]

|

|||||||

)

|

)

|

||||||

else:

|

else:

|

||||||

self.client.tls_set(self.mqtt_config.tls_ca_certs)

|

self.client.tls_set(self.mqtt_config.tls_ca_certs)

|

||||||

if not self.mqtt_config.tls_insecure is None:

|

if self.mqtt_config.tls_insecure is not None:

|

||||||

self.client.tls_insecure_set(self.mqtt_config.tls_insecure)

|

self.client.tls_insecure_set(self.mqtt_config.tls_insecure)

|

||||||

if not self.mqtt_config.user is None:

|

if self.mqtt_config.user is not None:

|

||||||

self.client.username_pw_set(

|

self.client.username_pw_set(

|

||||||

self.mqtt_config.user, password=self.mqtt_config.password

|

self.mqtt_config.user, password=self.mqtt_config.password

|

||||||

)

|

)

|

||||||

|

|||||||

@ -3,10 +3,9 @@

|

|||||||

import json

|

import json

|

||||||

import logging

|

import logging

|

||||||

import threading

|

import threading

|

||||||

|

|

||||||

from typing import Callable

|

from typing import Callable

|

||||||

|

|

||||||

from wsgiref.simple_server import make_server

|

from wsgiref.simple_server import make_server

|

||||||

|

|

||||||

from ws4py.server.wsgirefserver import (

|

from ws4py.server.wsgirefserver import (

|

||||||

WebSocketWSGIHandler,

|

WebSocketWSGIHandler,

|

||||||

WebSocketWSGIRequestHandler,

|

WebSocketWSGIRequestHandler,

|

||||||

@ -18,7 +17,6 @@ from ws4py.websocket import WebSocket

|

|||||||

from frigate.comms.dispatcher import Communicator

|

from frigate.comms.dispatcher import Communicator

|

||||||

from frigate.config import FrigateConfig

|

from frigate.config import FrigateConfig

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

@ -45,7 +43,7 @@ class WebSocketClient(Communicator): # type: ignore[misc]

|

|||||||

"topic": json_message.get("topic"),

|

"topic": json_message.get("topic"),

|

||||||

"payload": json_message.get("payload"),

|

"payload": json_message.get("payload"),

|

||||||

}

|

}

|

||||||

except Exception as e:

|

except Exception:

|

||||||

logger.warning(

|

logger.warning(

|

||||||

f"Unable to parse websocket message as valid json: {message.data.decode('utf-8')}"

|

f"Unable to parse websocket message as valid json: {message.data.decode('utf-8')}"

|

||||||

)

|

)

|

||||||

@ -82,7 +80,7 @@ class WebSocketClient(Communicator): # type: ignore[misc]

|

|||||||

"payload": payload,

|

"payload": payload,

|

||||||

}

|

}

|

||||||

)

|

)

|

||||||

except Exception as e:

|

except Exception:

|

||||||

# if the payload can't be decoded don't relay to clients

|

# if the payload can't be decoded don't relay to clients

|

||||||

logger.debug(f"payload for {topic} wasn't text. Skipping...")

|

logger.debug(f"payload for {topic} wasn't text. Skipping...")

|

||||||

return

|

return

|

||||||

|

|||||||

@ -8,26 +8,14 @@ from typing import Dict, List, Optional, Tuple, Union

|

|||||||

|

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import yaml

|

from pydantic import BaseModel, Extra, Field, parse_obj_as, validator

|

||||||

from pydantic import BaseModel, Extra, Field, validator, parse_obj_as

|

|

||||||

from pydantic.fields import PrivateAttr

|

from pydantic.fields import PrivateAttr

|

||||||

|

|

||||||

from frigate.const import (

|

from frigate.const import CACHE_DIR, DEFAULT_DB_PATH, REGEX_CAMERA_NAME, YAML_EXT

|

||||||

CACHE_DIR,

|

from frigate.detectors import DetectorConfig, ModelConfig

|

||||||

DEFAULT_DB_PATH,

|

from frigate.detectors.detector_config import InputTensorEnum # noqa: F401

|

||||||

REGEX_CAMERA_NAME,

|

from frigate.detectors.detector_config import PixelFormatEnum # noqa: F401

|

||||||

YAML_EXT,

|

|

||||||

)

|

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig

|

from frigate.detectors.detector_config import BaseDetectorConfig

|

||||||

from frigate.plus import PlusApi

|

|

||||||

from frigate.util import (

|

|

||||||

create_mask,

|

|

||||||

deep_merge,

|

|

||||||

get_ffmpeg_arg_list,

|

|

||||||

escape_special_characters,

|

|

||||||

load_config_with_no_duplicates,

|

|

||||||

load_labels,

|

|

||||||

)

|

|

||||||

from frigate.ffmpeg_presets import (

|

from frigate.ffmpeg_presets import (

|

||||||

parse_preset_hardware_acceleration_decode,

|

parse_preset_hardware_acceleration_decode,

|

||||||

parse_preset_hardware_acceleration_scale,

|

parse_preset_hardware_acceleration_scale,

|

||||||

@ -35,14 +23,14 @@ from frigate.ffmpeg_presets import (

|

|||||||

parse_preset_output_record,

|

parse_preset_output_record,

|

||||||

parse_preset_output_rtmp,

|

parse_preset_output_rtmp,

|

||||||

)

|

)

|

||||||

from frigate.detectors import (

|

from frigate.plus import PlusApi

|

||||||

PixelFormatEnum,

|

from frigate.util import (

|

||||||

InputTensorEnum,

|

create_mask,

|

||||||

ModelConfig,

|

deep_merge,

|

||||||

DetectorConfig,

|

escape_special_characters,

|

||||||

|

get_ffmpeg_arg_list,

|

||||||

|

load_config_with_no_duplicates,

|

||||||

)

|

)

|

||||||

from frigate.version import VERSION

|

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

@ -487,7 +475,7 @@ class CameraFfmpegConfig(FfmpegConfig):

|

|||||||

if len(roles) > len(roles_set):

|

if len(roles) > len(roles_set):

|

||||||

raise ValueError("Each input role may only be used once.")

|

raise ValueError("Each input role may only be used once.")

|

||||||

|

|

||||||

if not "detect" in roles:

|

if "detect" not in roles:

|

||||||

raise ValueError("The detect role is required.")

|

raise ValueError("The detect role is required.")

|

||||||

|

|

||||||

return v

|

return v

|

||||||

@ -776,12 +764,12 @@ def verify_config_roles(camera_config: CameraConfig) -> None:

|

|||||||

set([r for i in camera_config.ffmpeg.inputs for r in i.roles])

|

set([r for i in camera_config.ffmpeg.inputs for r in i.roles])

|

||||||

)

|

)

|

||||||

|

|

||||||

if camera_config.record.enabled and not "record" in assigned_roles:

|

if camera_config.record.enabled and "record" not in assigned_roles:

|

||||||

raise ValueError(

|

raise ValueError(

|

||||||

f"Camera {camera_config.name} has record enabled, but record is not assigned to an input."

|

f"Camera {camera_config.name} has record enabled, but record is not assigned to an input."

|

||||||

)

|

)

|

||||||

|

|

||||||

if camera_config.rtmp.enabled and not "rtmp" in assigned_roles:

|

if camera_config.rtmp.enabled and "rtmp" not in assigned_roles:

|

||||||

raise ValueError(

|

raise ValueError(

|

||||||

f"Camera {camera_config.name} has rtmp enabled, but rtmp is not assigned to an input."

|

f"Camera {camera_config.name} has rtmp enabled, but rtmp is not assigned to an input."

|

||||||

)

|

)

|

||||||

@ -1062,7 +1050,7 @@ class FrigateConfig(FrigateBaseModel):

|

|||||||

config.model.dict(exclude_unset=True),

|

config.model.dict(exclude_unset=True),

|

||||||

)

|

)

|

||||||

|

|

||||||

if not "path" in merged_model:

|

if "path" not in merged_model:

|

||||||

if detector_config.type == "cpu":

|

if detector_config.type == "cpu":

|

||||||

merged_model["path"] = "/cpu_model.tflite"

|

merged_model["path"] = "/cpu_model.tflite"

|

||||||

elif detector_config.type == "edgetpu":

|

elif detector_config.type == "edgetpu":

|

||||||

|

|||||||

@ -4,6 +4,7 @@ MODEL_CACHE_DIR = f"{CONFIG_DIR}/model_cache"

|

|||||||

BASE_DIR = "/media/frigate"

|

BASE_DIR = "/media/frigate"

|

||||||

CLIPS_DIR = f"{BASE_DIR}/clips"

|

CLIPS_DIR = f"{BASE_DIR}/clips"

|

||||||

RECORD_DIR = f"{BASE_DIR}/recordings"

|

RECORD_DIR = f"{BASE_DIR}/recordings"

|

||||||

|

EXPORT_DIR = f"{BASE_DIR}/exports"

|

||||||

BIRDSEYE_PIPE = "/tmp/cache/birdseye"

|

BIRDSEYE_PIPE = "/tmp/cache/birdseye"

|

||||||

CACHE_DIR = "/tmp/cache"

|

CACHE_DIR = "/tmp/cache"

|

||||||

YAML_EXT = (".yaml", ".yml")

|

YAML_EXT = (".yaml", ".yml")

|

||||||

@ -13,9 +14,9 @@ BTBN_PATH = "/usr/lib/btbn-ffmpeg"

|

|||||||

|

|

||||||

# Regex Consts

|

# Regex Consts

|

||||||

|

|

||||||

REGEX_CAMERA_NAME = "^[a-zA-Z0-9_-]+$"

|

REGEX_CAMERA_NAME = r"^[a-zA-Z0-9_-]+$"

|

||||||

REGEX_RTSP_CAMERA_USER_PASS = ":\/\/[a-zA-Z0-9_-]+:[\S]+@"

|

REGEX_RTSP_CAMERA_USER_PASS = r":\/\/[a-zA-Z0-9_-]+:[\S]+@"

|

||||||

REGEX_HTTP_CAMERA_USER_PASS = "user=[a-zA-Z0-9_-]+&password=[\S]+"

|

REGEX_HTTP_CAMERA_USER_PASS = r"user=[a-zA-Z0-9_-]+&password=[\S]+"

|

||||||

|

|

||||||

# Known Driver Names

|

# Known Driver Names

|

||||||

|

|

||||||

@ -28,3 +29,4 @@ DRIVER_INTEL_iHD = "iHD"

|

|||||||

|

|

||||||

MAX_SEGMENT_DURATION = 600

|

MAX_SEGMENT_DURATION = 600

|

||||||

SECONDS_IN_DAY = 60 * 60 * 24

|

SECONDS_IN_DAY = 60 * 60 * 24

|

||||||

|

MAX_PLAYLIST_SECONDS = 7200 # support 2 hour segments for a single playlist to account for cameras with inconsistent segment times

|

||||||

|

|||||||

@ -1,13 +1,7 @@

|

|||||||

import logging

|

import logging

|

||||||

|

|

||||||

from .detection_api import DetectionApi

|

from .detector_config import InputTensorEnum, ModelConfig, PixelFormatEnum # noqa: F401

|

||||||

from .detector_config import (

|

from .detector_types import DetectorConfig, DetectorTypeEnum, api_types # noqa: F401

|

||||||

PixelFormatEnum,

|

|

||||||

InputTensorEnum,

|

|

||||||

ModelConfig,

|

|

||||||

)

|

|

||||||

from .detector_types import DetectorTypeEnum, api_types, DetectorConfig

|

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|||||||

@ -1,7 +1,6 @@

|

|||||||

import logging

|

import logging

|

||||||

from abc import ABC, abstractmethod

|

from abc import ABC, abstractmethod

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@ -1,20 +1,18 @@

|

|||||||

import hashlib

|

import hashlib

|

||||||

import json

|

import json

|

||||||

import logging

|

import logging

|

||||||

from enum import Enum

|

|

||||||

import os

|

import os

|

||||||

from typing import Dict, List, Optional, Tuple, Union, Literal

|

from enum import Enum

|

||||||

|

from typing import Dict, Optional, Tuple

|

||||||

|

|

||||||

|

|

||||||

import requests

|

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

from pydantic import BaseModel, Extra, Field, validator

|

import requests

|

||||||

|

from pydantic import BaseModel, Extra, Field

|

||||||

from pydantic.fields import PrivateAttr

|

from pydantic.fields import PrivateAttr

|

||||||

|

|

||||||

from frigate.plus import PlusApi

|

from frigate.plus import PlusApi

|

||||||

|

|

||||||

from frigate.util import load_labels

|

from frigate.util import load_labels

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@ -1,16 +1,16 @@

|

|||||||

import logging

|

|

||||||

import importlib

|

import importlib

|

||||||

|

import logging

|

||||||

import pkgutil

|

import pkgutil

|

||||||

from typing import Union

|

|

||||||

from typing_extensions import Annotated

|

|

||||||

from enum import Enum

|

from enum import Enum

|

||||||

|

from typing import Union

|

||||||

|

|

||||||

from pydantic import Field

|

from pydantic import Field

|

||||||

|

from typing_extensions import Annotated

|

||||||

|

|

||||||

from . import plugins

|

from . import plugins

|

||||||

from .detection_api import DetectionApi

|

from .detection_api import DetectionApi

|

||||||

from .detector_config import BaseDetectorConfig

|

from .detector_config import BaseDetectorConfig

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@ -1,10 +1,11 @@

|

|||||||

import logging

|

import logging

|

||||||

|

|

||||||

import numpy as np

|

import numpy as np

|

||||||

|

from pydantic import Field

|

||||||

|

from typing_extensions import Literal

|

||||||

|

|

||||||

from frigate.detectors.detection_api import DetectionApi

|

from frigate.detectors.detection_api import DetectionApi

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig

|

from frigate.detectors.detector_config import BaseDetectorConfig

|

||||||

from typing_extensions import Literal

|

|

||||||

from pydantic import Extra, Field

|

|

||||||

|

|

||||||

try:

|

try:

|

||||||

from tflite_runtime.interpreter import Interpreter

|

from tflite_runtime.interpreter import Interpreter

|

||||||

|

|||||||

@ -1,14 +1,14 @@

|

|||||||

|

import io

|

||||||

import logging

|

import logging

|

||||||

|

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import requests

|

import requests

|

||||||

import io

|

from PIL import Image

|

||||||

|

from pydantic import Field

|

||||||

|

from typing_extensions import Literal

|

||||||

|

|

||||||

from frigate.detectors.detection_api import DetectionApi

|

from frigate.detectors.detection_api import DetectionApi

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig

|

from frigate.detectors.detector_config import BaseDetectorConfig

|

||||||

from typing_extensions import Literal

|

|

||||||

from pydantic import Extra, Field

|

|

||||||

from PIL import Image

|

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

@ -64,11 +64,11 @@ class DeepStack(DetectionApi):

|

|||||||

for i, detection in enumerate(response_json.get("predictions")):

|

for i, detection in enumerate(response_json.get("predictions")):

|

||||||

logger.debug(f"Response: {detection}")

|

logger.debug(f"Response: {detection}")

|

||||||

if detection["confidence"] < 0.4:

|

if detection["confidence"] < 0.4:

|

||||||

logger.debug(f"Break due to confidence < 0.4")

|

logger.debug("Break due to confidence < 0.4")

|

||||||

break

|

break

|

||||||

label = self.get_label_index(detection["label"])

|

label = self.get_label_index(detection["label"])

|

||||||

if label < 0:

|

if label < 0:

|

||||||

logger.debug(f"Break due to unknown label")

|

logger.debug("Break due to unknown label")

|

||||||

break

|

break

|

||||||

detections[i] = [

|

detections[i] = [

|

||||||

label,

|

label,

|

||||||

|

|||||||

@ -1,10 +1,11 @@

|

|||||||

import logging

|

import logging

|

||||||

|

|

||||||

import numpy as np

|

import numpy as np

|

||||||

|

from pydantic import Field

|

||||||

|

from typing_extensions import Literal

|

||||||

|

|

||||||

from frigate.detectors.detection_api import DetectionApi

|

from frigate.detectors.detection_api import DetectionApi

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig

|

from frigate.detectors.detector_config import BaseDetectorConfig

|

||||||

from typing_extensions import Literal

|

|

||||||

from pydantic import Extra, Field

|

|

||||||

|

|

||||||

try:

|

try:

|

||||||

from tflite_runtime.interpreter import Interpreter, load_delegate

|

from tflite_runtime.interpreter import Interpreter, load_delegate

|

||||||

|

|||||||

@ -1,12 +1,12 @@

|

|||||||

import logging

|

import logging

|

||||||

|

|

||||||

import numpy as np

|

import numpy as np

|

||||||

import openvino.runtime as ov

|

import openvino.runtime as ov

|

||||||

|

from pydantic import Field

|

||||||

|

from typing_extensions import Literal

|

||||||

|

|

||||||

from frigate.detectors.detection_api import DetectionApi

|

from frigate.detectors.detection_api import DetectionApi

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig, ModelTypeEnum

|

from frigate.detectors.detector_config import BaseDetectorConfig, ModelTypeEnum

|

||||||

from typing_extensions import Literal

|

|

||||||

from pydantic import Extra, Field

|

|

||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

@ -41,7 +41,7 @@ class OvDetector(DetectionApi):

|

|||||||

tensor_shape = self.interpreter.output(self.output_indexes).shape

|

tensor_shape = self.interpreter.output(self.output_indexes).shape

|

||||||

logger.info(f"Model Output-{self.output_indexes} Shape: {tensor_shape}")

|

logger.info(f"Model Output-{self.output_indexes} Shape: {tensor_shape}")

|

||||||

self.output_indexes += 1

|

self.output_indexes += 1

|

||||||

except:

|

except Exception:

|

||||||

logger.info(f"Model has {self.output_indexes} Output Tensors")

|

logger.info(f"Model has {self.output_indexes} Output Tensors")

|

||||||

break

|

break

|

||||||

if self.ov_model_type == ModelTypeEnum.yolox:

|

if self.ov_model_type == ModelTypeEnum.yolox:

|

||||||

|

|||||||

@ -1,6 +1,6 @@

|

|||||||

|

import ctypes

|

||||||

import logging

|

import logging

|

||||||

|

|

||||||

import ctypes

|

|

||||||

import numpy as np

|

import numpy as np

|

||||||

|

|

||||||

try:

|

try:

|

||||||

@ -8,13 +8,14 @@ try:

|

|||||||

from cuda import cuda

|

from cuda import cuda

|

||||||

|

|

||||||

TRT_SUPPORT = True

|

TRT_SUPPORT = True

|

||||||

except ModuleNotFoundError as e:

|

except ModuleNotFoundError:

|

||||||

TRT_SUPPORT = False

|

TRT_SUPPORT = False

|

||||||

|

|

||||||

|

from pydantic import Field

|

||||||

|

from typing_extensions import Literal

|

||||||

|

|

||||||

from frigate.detectors.detection_api import DetectionApi

|

from frigate.detectors.detection_api import DetectionApi

|

||||||

from frigate.detectors.detector_config import BaseDetectorConfig

|

from frigate.detectors.detector_config import BaseDetectorConfig

|

||||||

from typing_extensions import Literal

|

|

||||||

from pydantic import Field

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

@ -172,7 +173,7 @@ class TensorRtDetector(DetectionApi):

|

|||||||

if not self.context.execute_async_v2(

|

if not self.context.execute_async_v2(

|

||||||

bindings=self.bindings, stream_handle=self.stream

|

bindings=self.bindings, stream_handle=self.stream

|

||||||

):

|

):

|

||||||

logger.warn(f"Execute returned false")

|

logger.warn("Execute returned false")

|

||||||

|

|

||||||

# Transfer predictions back from the GPU.

|

# Transfer predictions back from the GPU.

|

||||||

[

|

[

|

||||||

|

|||||||

@ -4,17 +4,13 @@ import datetime

|

|||||||

import logging

|

import logging

|

||||||

import os

|

import os

|

||||||

import threading

|

import threading

|

||||||

|

from multiprocessing.synchronize import Event as MpEvent

|

||||||

from pathlib import Path

|

from pathlib import Path

|

||||||

|

|

||||||

from peewee import fn

|

|

||||||

|

|

||||||

from frigate.config import FrigateConfig

|

from frigate.config import FrigateConfig

|

||||||

from frigate.const import CLIPS_DIR

|

from frigate.const import CLIPS_DIR

|

||||||

from frigate.models import Event

|

from frigate.models import Event

|

||||||

|

|

||||||

from multiprocessing.synchronize import Event as MpEvent

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

@ -45,9 +41,9 @@ class EventCleanup(threading.Thread):

|

|||||||

)

|

)

|

||||||

|

|

||||||

# loop over object types in db

|

# loop over object types in db

|

||||||

for l in distinct_labels:

|

for event in distinct_labels:

|

||||||

# get expiration time for this label

|

# get expiration time for this label

|

||||||

expire_days = retain_config.objects.get(l.label, retain_config.default)

|

expire_days = retain_config.objects.get(event.label, retain_config.default)

|

||||||

expire_after = (

|

expire_after = (

|

||||||

datetime.datetime.now() - datetime.timedelta(days=expire_days)

|

datetime.datetime.now() - datetime.timedelta(days=expire_days)

|

||||||

).timestamp()

|

).timestamp()

|

||||||

@ -55,8 +51,8 @@ class EventCleanup(threading.Thread):

|

|||||||

expired_events = Event.select().where(

|

expired_events = Event.select().where(

|

||||||

Event.camera.not_in(self.camera_keys),

|

Event.camera.not_in(self.camera_keys),

|

||||||

Event.start_time < expire_after,

|

Event.start_time < expire_after,

|

||||||

Event.label == l.label,

|

Event.label == event.label,

|

||||||

Event.retain_indefinitely == False,

|

Event.retain_indefinitely is False,

|

||||||

)

|

)

|

||||||

# delete the media from disk

|

# delete the media from disk

|

||||||

for event in expired_events:

|

for event in expired_events:

|

||||||

@ -75,8 +71,8 @@ class EventCleanup(threading.Thread):

|

|||||||

update_query = Event.update(update_params).where(

|

update_query = Event.update(update_params).where(

|

||||||

Event.camera.not_in(self.camera_keys),

|

Event.camera.not_in(self.camera_keys),

|

||||||

Event.start_time < expire_after,

|

Event.start_time < expire_after,

|

||||||

Event.label == l.label,

|

Event.label == event.label,

|

||||||

Event.retain_indefinitely == False,

|

Event.retain_indefinitely is False,

|

||||||

)

|

)

|

||||||

update_query.execute()

|

update_query.execute()

|

||||||

|

|

||||||

@ -92,9 +88,11 @@ class EventCleanup(threading.Thread):

|

|||||||

)

|

)

|

||||||

|

|

||||||

# loop over object types in db

|

# loop over object types in db

|

||||||

for l in distinct_labels:

|

for event in distinct_labels:

|

||||||

# get expiration time for this label

|

# get expiration time for this label

|

||||||

expire_days = retain_config.objects.get(l.label, retain_config.default)

|

expire_days = retain_config.objects.get(

|

||||||

|

event.label, retain_config.default

|

||||||

|

)

|

||||||

expire_after = (

|

expire_after = (

|

||||||

datetime.datetime.now() - datetime.timedelta(days=expire_days)

|

datetime.datetime.now() - datetime.timedelta(days=expire_days)

|

||||||

).timestamp()

|

).timestamp()

|

||||||

@ -102,8 +100,8 @@ class EventCleanup(threading.Thread):

|

|||||||

expired_events = Event.select().where(

|

expired_events = Event.select().where(

|

||||||

Event.camera == name,

|

Event.camera == name,

|

||||||

Event.start_time < expire_after,

|

Event.start_time < expire_after,

|

||||||

Event.label == l.label,

|

Event.label == event.label,

|

||||||

Event.retain_indefinitely == False,

|

Event.retain_indefinitely is False,

|

||||||

)

|

)

|

||||||

# delete the grabbed clips from disk

|

# delete the grabbed clips from disk

|

||||||

for event in expired_events:

|

for event in expired_events:

|

||||||

@ -121,8 +119,8 @@ class EventCleanup(threading.Thread):

|

|||||||

update_query = Event.update(update_params).where(

|

update_query = Event.update(update_params).where(

|

||||||

Event.camera == name,

|

Event.camera == name,

|

||||||

Event.start_time < expire_after,

|

Event.start_time < expire_after,

|

||||||

Event.label == l.label,

|

Event.label == event.label,

|

||||||

Event.retain_indefinitely == False,

|

Event.retain_indefinitely is False,

|

||||||

)

|

)

|

||||||

update_query.execute()

|

update_query.execute()

|

||||||

|

|

||||||

@ -131,9 +129,9 @@ class EventCleanup(threading.Thread):

|

|||||||

select id,

|

select id,

|

||||||

label,

|

label,

|

||||||

camera,

|

camera,

|

||||||

has_snapshot,

|

has_snapshot,

|

||||||

has_clip,

|

has_clip,

|

||||||

row_number() over (

|

row_number() over (

|

||||||

partition by label, camera, round(start_time/5,0)*5

|

partition by label, camera, round(start_time/5,0)*5

|

||||||

order by end_time-start_time desc

|

order by end_time-start_time desc

|

||||||

) as copy_number

|

) as copy_number

|

||||||

@ -169,8 +167,8 @@ class EventCleanup(threading.Thread):

|

|||||||

|

|

||||||

# drop events from db where has_clip and has_snapshot are false

|

# drop events from db where has_clip and has_snapshot are false

|

||||||

delete_query = Event.delete().where(

|

delete_query = Event.delete().where(

|

||||||

Event.has_clip == False, Event.has_snapshot == False

|

Event.has_clip is False, Event.has_snapshot is False

|

||||||

)

|

)

|

||||||

delete_query.execute()

|

delete_query.execute()

|

||||||

|

|

||||||

logger.info(f"Exiting event cleanup...")

|

logger.info("Exiting event cleanup...")

|

||||||

|

|||||||

@ -1,17 +1,15 @@

|

|||||||

"""Handle external events created by the user."""

|

"""Handle external events created by the user."""

|

||||||

|

|

||||||

import base64

|

import base64

|

||||||

import cv2

|

|

||||||

import datetime

|

import datetime

|

||||||

import glob

|

|

||||||

import logging

|

import logging

|

||||||

import os

|

import os

|

||||||

import random

|

import random

|

||||||

import string

|

import string

|

||||||

|

from multiprocessing.queues import Queue

|

||||||

from typing import Optional

|

from typing import Optional

|

||||||

|

|

||||||

from multiprocessing.queues import Queue

|

import cv2

|

||||||

|

|

||||||

from frigate.config import CameraConfig, FrigateConfig

|

from frigate.config import CameraConfig, FrigateConfig

|

||||||

from frigate.const import CLIPS_DIR

|

from frigate.const import CLIPS_DIR

|

||||||

|

|||||||

@ -2,20 +2,16 @@ import datetime

|

|||||||

import logging

|

import logging

|

||||||

import queue

|

import queue

|

||||||

import threading

|

import threading

|

||||||

|

|

||||||

from enum import Enum

|

from enum import Enum

|

||||||

|

from multiprocessing.queues import Queue

|

||||||

from peewee import fn

|

from multiprocessing.synchronize import Event as MpEvent

|

||||||

|

from typing import Dict

|

||||||

|

|

||||||

from frigate.config import EventsConfig, FrigateConfig

|

from frigate.config import EventsConfig, FrigateConfig

|

||||||

from frigate.models import Event

|

from frigate.models import Event

|

||||||

from frigate.types import CameraMetricsTypes

|

from frigate.types import CameraMetricsTypes

|

||||||

from frigate.util import to_relative_box

|

from frigate.util import to_relative_box

|

||||||

|

|

||||||

from multiprocessing.queues import Queue

|

|

||||||

from multiprocessing.synchronize import Event as MpEvent

|

|

||||||

from typing import Dict

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

@ -65,7 +61,7 @@ class EventProcessor(threading.Thread):

|

|||||||

def run(self) -> None:

|

def run(self) -> None:

|

||||||

# set an end_time on events without an end_time on startup

|

# set an end_time on events without an end_time on startup

|

||||||

Event.update(end_time=Event.start_time + 30).where(

|

Event.update(end_time=Event.start_time + 30).where(

|

||||||

Event.end_time == None

|

Event.end_time is None

|

||||||

).execute()

|

).execute()

|

||||||

|

|

||||||

while not self.stop_event.is_set():

|

while not self.stop_event.is_set():

|

||||||

@ -99,9 +95,9 @@ class EventProcessor(threading.Thread):

|

|||||||

|

|

||||||

# set an end_time on events without an end_time before exiting

|

# set an end_time on events without an end_time before exiting

|

||||||

Event.update(end_time=datetime.datetime.now().timestamp()).where(

|

Event.update(end_time=datetime.datetime.now().timestamp()).where(

|

||||||

Event.end_time == None

|

Event.end_time is None

|

||||||

).execute()

|

).execute()

|

||||||

logger.info(f"Exiting event processor...")

|

logger.info("Exiting event processor...")

|

||||||

|

|

||||||

def handle_object_detection(

|

def handle_object_detection(

|

||||||

self,

|

self,

|

||||||

|

|||||||

@ -2,13 +2,12 @@

|

|||||||

|

|

||||||

import logging

|

import logging

|

||||||

import os

|

import os

|

||||||

|

from enum import Enum

|

||||||

from typing import Any

|

from typing import Any

|

||||||

|

|

||||||

from frigate.version import VERSION

|

|

||||||

from frigate.const import BTBN_PATH

|

from frigate.const import BTBN_PATH

|

||||||

from frigate.util import vainfo_hwaccel

|

from frigate.util import vainfo_hwaccel

|

||||||

|

from frigate.version import VERSION

|

||||||

|

|

||||||