mirror of

https://github.com/blakeblackshear/frigate.git

synced 2026-02-05 10:45:21 +03:00

Merge remote-tracking branch 'origin/dev' into prometheus-metrics

commit

03222bff1e

@ -52,7 +52,9 @@

|

|||||||

"mikestead.dotenv",

|

"mikestead.dotenv",

|

||||||

"csstools.postcss",

|

"csstools.postcss",

|

||||||

"blanu.vscode-styled-jsx",

|

"blanu.vscode-styled-jsx",

|

||||||

"bradlc.vscode-tailwindcss"

|

"bradlc.vscode-tailwindcss",

|

||||||

|

"ms-python.isort",

|

||||||

|

"charliermarsh.ruff"

|

||||||

],

|

],

|

||||||

"settings": {

|

"settings": {

|

||||||

"remote.autoForwardPorts": false,

|

"remote.autoForwardPorts": false,

|

||||||

@ -68,6 +70,7 @@

|

|||||||

"python.testing.unittestArgs": ["-v", "-s", "./frigate/test"],

|

"python.testing.unittestArgs": ["-v", "-s", "./frigate/test"],

|

||||||

"files.trimTrailingWhitespace": true,

|

"files.trimTrailingWhitespace": true,

|

||||||

"eslint.workingDirectories": ["./web"],

|

"eslint.workingDirectories": ["./web"],

|

||||||

|

"isort.args": ["--settings-path=./pyproject.toml"],

|

||||||

"[python]": {

|

"[python]": {

|

||||||

"editor.defaultFormatter": "ms-python.black-formatter",

|

"editor.defaultFormatter": "ms-python.black-formatter",

|

||||||

"editor.formatOnSave": true

|

"editor.formatOnSave": true

|

||||||

|

|||||||

@ -2,6 +2,12 @@

|

|||||||

|

|

||||||

set -euxo pipefail

|

set -euxo pipefail

|

||||||

|

|

||||||

|

# Cleanup the old github host key

|

||||||

|

sed -i -e '/AAAAB3NzaC1yc2EAAAABIwAAAQEAq2A7hRGmdnm9tUDbO9IDSwBK6TbQa+PXYPCPy6rbTrTtw7PHkccKrpp0yVhp5HdEIcKr6pLlVDBfOLX9QUsyCOV0wzfjIJNlGEYsdlLJizHhbn2mUjvSAHQqZETYP81eFzLQNnPHt4EVVUh7VfDESU84KezmD5QlWpXLmvU31\/yMf+Se8xhHTvKSCZIFImWwoG6mbUoWf9nzpIoaSjB+weqqUUmpaaasXVal72J+UX2B+2RPW3RcT0eOzQgqlJL3RKrTJvdsjE3JEAvGq3lGHSZXy28G3skua2SmVi\/w4yCE6gbODqnTWlg7+wC604ydGXA8VJiS5ap43JXiUFFAaQ==/d' ~/.ssh/known_hosts

|

||||||

|

# Add new github host key

|

||||||

|

curl -L https://api.github.com/meta | jq -r '.ssh_keys | .[]' | \

|

||||||

|

sed -e 's/^/github.com /' >> ~/.ssh/known_hosts

|

||||||

|

|

||||||

# Frigate normal container runs as root, so it have permission to create

|

# Frigate normal container runs as root, so it have permission to create

|

||||||

# the folders. But the devcontainer runs as the host user, so we need to

|

# the folders. But the devcontainer runs as the host user, so we need to

|

||||||

# create the folders and give the host user permission to write to them.

|

# create the folders and give the host user permission to write to them.

|

||||||

|

|||||||

16

.github/workflows/pull_request.yml

vendored

@ -65,16 +65,22 @@ jobs:

|

|||||||

- name: Check out the repository

|

- name: Check out the repository

|

||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

- name: Set up Python ${{ env.DEFAULT_PYTHON }}

|

- name: Set up Python ${{ env.DEFAULT_PYTHON }}

|

||||||

uses: actions/setup-python@v4.6.0

|

uses: actions/setup-python@v4.6.1

|

||||||

with:

|

with:

|

||||||

python-version: ${{ env.DEFAULT_PYTHON }}

|

python-version: ${{ env.DEFAULT_PYTHON }}

|

||||||

- name: Install requirements

|

- name: Install requirements

|

||||||

run: |

|

run: |

|

||||||

pip install pip

|

python3 -m pip install -U pip

|

||||||

pip install -r requirements-dev.txt

|

python3 -m pip install -r requirements-dev.txt

|

||||||

- name: Lint

|

- name: Check black

|

||||||

run: |

|

run: |

|

||||||

python3 -m black frigate --check

|

black --check --diff frigate migrations docker *.py

|

||||||

|

- name: Check isort

|

||||||

|

run: |

|

||||||

|

isort --check --diff frigate migrations docker *.py

|

||||||

|

- name: Check ruff

|

||||||

|

run: |

|

||||||

|

ruff check frigate migrations docker *.py

|

||||||

|

|

||||||

python_tests:

|

python_tests:

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

|

|||||||

@ -27,7 +27,7 @@ RUN --mount=type=tmpfs,target=/tmp --mount=type=tmpfs,target=/var/cache/apt \

|

|||||||

FROM wget AS go2rtc

|

FROM wget AS go2rtc

|

||||||

ARG TARGETARCH

|

ARG TARGETARCH

|

||||||

WORKDIR /rootfs/usr/local/go2rtc/bin

|

WORKDIR /rootfs/usr/local/go2rtc/bin

|

||||||

RUN wget -qO go2rtc "https://github.com/AlexxIT/go2rtc/releases/download/v1.2.0/go2rtc_linux_${TARGETARCH}" \

|

RUN wget -qO go2rtc "https://github.com/AlexxIT/go2rtc/releases/download/v1.5.0/go2rtc_linux_${TARGETARCH}" \

|

||||||

&& chmod +x go2rtc

|

&& chmod +x go2rtc

|

||||||

|

|

||||||

|

|

||||||

@ -227,8 +227,8 @@ CMD ["sleep", "infinity"]

|

|||||||

|

|

||||||

|

|

||||||

# Frigate web build

|

# Frigate web build

|

||||||

# force this to run on amd64 because QEMU is painfully slow

|

# This should be architecture agnostic, so speed up the build on multiarch by not using QEMU.

|

||||||

FROM --platform=linux/amd64 node:16 AS web-build

|

FROM --platform=$BUILDPLATFORM node:16 AS web-build

|

||||||

|

|

||||||

WORKDIR /work

|

WORKDIR /work

|

||||||

COPY web/package.json web/package-lock.json ./

|

COPY web/package.json web/package-lock.json ./

|

||||||

|

|||||||

12

benchmark.py

@ -1,11 +1,11 @@

|

|||||||

import os

|

|

||||||

from statistics import mean

|

|

||||||

import multiprocessing as mp

|

|

||||||

import numpy as np

|

|

||||||

import datetime

|

import datetime

|

||||||

|

import multiprocessing as mp

|

||||||

|

from statistics import mean

|

||||||

|

|

||||||

|

import numpy as np

|

||||||

|

|

||||||

from frigate.config import DetectorTypeEnum

|

from frigate.config import DetectorTypeEnum

|

||||||

from frigate.object_detection import (

|

from frigate.object_detection import (

|

||||||

LocalObjectDetector,

|

|

||||||

ObjectDetectProcess,

|

ObjectDetectProcess,

|

||||||

RemoteObjectDetector,

|

RemoteObjectDetector,

|

||||||

load_labels,

|

load_labels,

|

||||||

@ -53,7 +53,7 @@ def start(id, num_detections, detection_queue, event):

|

|||||||

frame_times = []

|

frame_times = []

|

||||||

for x in range(0, num_detections):

|

for x in range(0, num_detections):

|

||||||

start_frame = datetime.datetime.now().timestamp()

|

start_frame = datetime.datetime.now().timestamp()

|

||||||

detections = object_detector.detect(my_frame)

|

object_detector.detect(my_frame)

|

||||||

frame_times.append(datetime.datetime.now().timestamp() - start_frame)

|

frame_times.append(datetime.datetime.now().timestamp() - start_frame)

|

||||||

|

|

||||||

duration = datetime.datetime.now().timestamp() - start

|

duration = datetime.datetime.now().timestamp() - start

|

||||||

|

|||||||

@ -12,13 +12,15 @@ apt-get -qq install --no-install-recommends -y \

|

|||||||

unzip locales tzdata libxml2 xz-utils \

|

unzip locales tzdata libxml2 xz-utils \

|

||||||

python3-pip \

|

python3-pip \

|

||||||

curl \

|

curl \

|

||||||

jq

|

jq \

|

||||||

|

nethogs

|

||||||

|

|

||||||

mkdir -p -m 600 /root/.gnupg

|

mkdir -p -m 600 /root/.gnupg

|

||||||

|

|

||||||

# add coral repo

|

# add coral repo

|

||||||

wget --quiet -O /usr/share/keyrings/google-edgetpu.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

|

curl -fsSLo - https://packages.cloud.google.com/apt/doc/apt-key.gpg | \

|

||||||

echo "deb [signed-by=/usr/share/keyrings/google-edgetpu.gpg] https://packages.cloud.google.com/apt coral-edgetpu-stable main" | tee /etc/apt/sources.list.d/coral-edgetpu.list

|

gpg --dearmor -o /etc/apt/trusted.gpg.d/google-cloud-packages-archive-keyring.gpg

|

||||||

|

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | tee /etc/apt/sources.list.d/coral-edgetpu.list

|

||||||

echo "libedgetpu1-max libedgetpu/accepted-eula select true" | debconf-set-selections

|

echo "libedgetpu1-max libedgetpu/accepted-eula select true" | debconf-set-selections

|

||||||

|

|

||||||

# enable non-free repo

|

# enable non-free repo

|

||||||

|

|||||||

@ -3,11 +3,14 @@

|

|||||||

import json

|

import json

|

||||||

import os

|

import os

|

||||||

import sys

|

import sys

|

||||||

|

|

||||||

import yaml

|

import yaml

|

||||||

|

|

||||||

sys.path.insert(0, "/opt/frigate")

|

sys.path.insert(0, "/opt/frigate")

|

||||||

from frigate.const import BIRDSEYE_PIPE, BTBN_PATH

|

from frigate.const import BIRDSEYE_PIPE, BTBN_PATH # noqa: E402

|

||||||

from frigate.ffmpeg_presets import parse_preset_hardware_acceleration_encode

|

from frigate.ffmpeg_presets import ( # noqa: E402

|

||||||

|

parse_preset_hardware_acceleration_encode,

|

||||||

|

)

|

||||||

|

|

||||||

sys.path.remove("/opt/frigate")

|

sys.path.remove("/opt/frigate")

|

||||||

|

|

||||||

|

|||||||

@ -2,4 +2,4 @@

|

|||||||

|

|

||||||

This website is built using [Docusaurus 2](https://v2.docusaurus.io/), a modern static website generator.

|

This website is built using [Docusaurus 2](https://v2.docusaurus.io/), a modern static website generator.

|

||||||

|

|

||||||

For installation and contributing instructions, please follow the [Contributing Docs](https://blakeblackshear.github.io/frigate/contributing).

|

For installation and contributing instructions, please follow the [Contributing Docs](https://docs.frigate.video/development/contributing).

|

||||||

|

|||||||

@ -107,3 +107,14 @@ To do this:

|

|||||||

3. Restart Frigate and the custom version will be used if the mapping was done correctly.

|

3. Restart Frigate and the custom version will be used if the mapping was done correctly.

|

||||||

|

|

||||||

NOTE: The folder that is mapped from the host needs to be the folder that contains `/bin`. So if the full structure is `/home/appdata/frigate/custom-ffmpeg/bin/ffmpeg` then `/home/appdata/frigate/custom-ffmpeg` needs to be mapped to `/usr/lib/btbn-ffmpeg`.

|

NOTE: The folder that is mapped from the host needs to be the folder that contains `/bin`. So if the full structure is `/home/appdata/frigate/custom-ffmpeg/bin/ffmpeg` then `/home/appdata/frigate/custom-ffmpeg` needs to be mapped to `/usr/lib/btbn-ffmpeg`.

|

||||||

|

|

||||||

|

## Custom go2rtc version

|

||||||

|

|

||||||

|

Frigate currently includes go2rtc v1.5.0, but there may be cases where you want to run a different version of go2rtc.

|

||||||

|

|

||||||

|

To do this:

|

||||||

|

|

||||||

|

1. Download the go2rtc build to the /config folder.

|

||||||

|

2. Rename the build to `go2rtc`.

|

||||||

|

3. Give `go2rtc` execute permission.

|

||||||

|

4. Restart Frigate and the custom version will be used. You can verify this by checking the go2rtc logs.
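
The steps above can be sketched as a small shell helper. This is a minimal sketch, not an official install script: the function names `go2rtc_url` and `install_custom_go2rtc` are hypothetical, the release URL pattern is assumed to match the one used in Frigate's Dockerfile, and the config folder path is a placeholder for wherever your `/config` volume lives on the host.

```shell
#!/bin/sh
# Hypothetical helper: build the release URL for a go2rtc version and architecture.
# Assumption: the pattern matches the URL used in Frigate's Dockerfile.
go2rtc_url() {
  version="$1"
  arch="$2"
  printf 'https://github.com/AlexxIT/go2rtc/releases/download/v%s/go2rtc_linux_%s\n' "$version" "$arch"
}

# Hypothetical helper: download the build into the config folder under the
# name `go2rtc` (steps 1 and 2) and give it execute permission (step 3).
install_custom_go2rtc() {
  config_dir="$1"
  version="$2"
  arch="$3"
  curl -fsSL -o "$config_dir/go2rtc" "$(go2rtc_url "$version" "$arch")" &&
    chmod +x "$config_dir/go2rtc"
}

# Example call (placeholder path, not executed here):
#   install_custom_go2rtc /path/to/frigate/config 1.5.0 amd64
```

After running the helper, restart Frigate (step 4) and check the go2rtc logs to confirm which version started.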

|

||||||

|

|||||||

@ -141,7 +141,7 @@ go2rtc:

|

|||||||

- rtspx://192.168.1.1:7441/abcdefghijk

|

- rtspx://192.168.1.1:7441/abcdefghijk

|

||||||

```

|

```

|

||||||

|

|

||||||

[See the go2rtc docs for more information](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#source-rtsp)

|

[See the go2rtc docs for more information](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#source-rtsp)

|

||||||

|

|

||||||

In the Unifi 2.0 update Unifi Protect Cameras had a change in audio sample rate which causes issues for ffmpeg. The input rate needs to be set for record and rtmp if used directly with unifi protect.

|

In the Unifi 2.0 update Unifi Protect Cameras had a change in audio sample rate which causes issues for ffmpeg. The input rate needs to be set for record and rtmp if used directly with unifi protect.

|

||||||

|

|

||||||

|

|||||||

@ -198,7 +198,7 @@ To generate model files, create a new folder to save the models, download the sc

|

|||||||

|

|

||||||

```bash

|

```bash

|

||||||

mkdir trt-models

|

mkdir trt-models

|

||||||

wget https://raw.githubusercontent.com/blakeblackshear/frigate/docker/tensorrt_models.sh

|

wget https://github.com/blakeblackshear/frigate/raw/master/docker/tensorrt_models.sh

|

||||||

chmod +x tensorrt_models.sh

|

chmod +x tensorrt_models.sh

|

||||||

docker run --gpus=all --rm -it -v `pwd`/trt-models:/tensorrt_models -v `pwd`/tensorrt_models.sh:/tensorrt_models.sh nvcr.io/nvidia/tensorrt:22.07-py3 /tensorrt_models.sh

|

docker run --gpus=all --rm -it -v `pwd`/trt-models:/tensorrt_models -v `pwd`/tensorrt_models.sh:/tensorrt_models.sh nvcr.io/nvidia/tensorrt:22.07-py3 /tensorrt_models.sh

|

||||||

```

|

```

|

||||||

|

|||||||

@ -15,7 +15,23 @@ ffmpeg:

|

|||||||

hwaccel_args: preset-rpi-64-h264

|

hwaccel_args: preset-rpi-64-h264

|

||||||

```

|

```

|

||||||

|

|

||||||

### Intel-based CPUs (<10th Generation) via VAAPI

|

:::note

|

||||||

|

|

||||||

|

If running Frigate in Docker, you either need to run in privileged mode or be sure to map the `/dev/video1x` devices to Frigate.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

docker run -d \

|

||||||

|

--name frigate \

|

||||||

|

...

|

||||||

|

--device /dev/video10 \

|

||||||

|

ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

```

|

||||||

|

|

||||||

|

:::

|

||||||

|

|

||||||

|

### Intel-based CPUs

|

||||||

|

|

||||||

|

#### Via VAAPI

|

||||||

|

|

||||||

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams. VAAPI is recommended for all generations of Intel-based CPUs if QSV does not work.

|

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams. VAAPI is recommended for all generations of Intel-based CPUs if QSV does not work.

|

||||||

|

|

||||||

@ -26,24 +42,89 @@ ffmpeg:

|

|||||||

|

|

||||||

**NOTICE**: With some of the processors, like the J4125, the default driver `iHD` doesn't seem to work correctly for hardware acceleration. You may need to change the driver to `i965` by adding the following environment variable `LIBVA_DRIVER_NAME=i965` to your docker-compose file or [in the `frigate.yaml` for HA OS users](advanced.md#environment_vars).

|

**NOTICE**: With some of the processors, like the J4125, the default driver `iHD` doesn't seem to work correctly for hardware acceleration. You may need to change the driver to `i965` by adding the following environment variable `LIBVA_DRIVER_NAME=i965` to your docker-compose file or [in the `frigate.yaml` for HA OS users](advanced.md#environment_vars).

|

||||||

|

|

||||||

### Intel-based CPUs (>=10th Generation) via Quicksync

|

#### Via Quicksync (>=10th Generation only)

|

||||||

|

|

||||||

QSV must be set specifically based on the video encoding of the stream.

|

QSV must be set specifically based on the video encoding of the stream.

|

||||||

|

|

||||||

#### H.264 streams

|

##### H.264 streams

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

hwaccel_args: preset-intel-qsv-h264

|

hwaccel_args: preset-intel-qsv-h264

|

||||||

```

|

```

|

||||||

|

|

||||||

#### H.265 streams

|

##### H.265 streams

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

hwaccel_args: preset-intel-qsv-h265

|

hwaccel_args: preset-intel-qsv-h265

|

||||||

```

|

```

|

||||||

|

|

||||||

|

#### Configuring Intel GPU Stats in Docker

|

||||||

|

|

||||||

|

Additional configuration is needed for the Docker container to be able to access the `intel_gpu_top` command for GPU stats. Three possible changes can be made:

|

||||||

|

|

||||||

|

1. Run the container as privileged.

|

||||||

|

2. Add the `CAP_PERFMON` capability.

|

||||||

|

3. Set `perf_event_paranoid` low enough to allow access to the performance event system.

|

||||||

|

|

||||||

|

##### Run as privileged

|

||||||

|

|

||||||

|

This method works, but it gives more permissions to the container than are actually needed.

|

||||||

|

|

||||||

|

###### Docker Compose - Privileged

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

services:

|

||||||

|

frigate:

|

||||||

|

...

|

||||||

|

image: ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

privileged: true

|

||||||

|

```

|

||||||

|

|

||||||

|

###### Docker Run CLI - Privileged

|

||||||

|

|

||||||

|

```bash

|

||||||

|

docker run -d \

|

||||||

|

--name frigate \

|

||||||

|

...

|

||||||

|

--privileged \

|

||||||

|

ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

```

|

||||||

|

|

||||||

|

##### CAP_PERFMON

|

||||||

|

|

||||||

|

Only recent versions of Docker support the `CAP_PERFMON` capability. You can test to see if yours supports it by running: `docker run --cap-add=CAP_PERFMON hello-world`

|

||||||

|

|

||||||

|

###### Docker Compose - CAP_PERFMON

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

services:

|

||||||

|

frigate:

|

||||||

|

...

|

||||||

|

image: ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

cap_add:

|

||||||

|

- CAP_PERFMON

|

||||||

|

```

|

||||||

|

|

||||||

|

###### Docker Run CLI - CAP_PERFMON

|

||||||

|

|

||||||

|

```bash

|

||||||

|

docker run -d \

|

||||||

|

--name frigate \

|

||||||

|

...

|

||||||

|

--cap-add=CAP_PERFMON \

|

||||||

|

ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

```

|

||||||

|

|

||||||

|

##### perf_event_paranoid

|

||||||

|

|

||||||

|

_Note: This setting must be changed for the entire system._

|

||||||

|

|

||||||

|

For more information on the various values across different distributions, see https://askubuntu.com/questions/1400874/what-does-perf-paranoia-level-four-do.

|

||||||

|

|

||||||

|

Depending on your OS and kernel configuration, you may need to change the `/proc/sys/kernel/perf_event_paranoid` kernel tunable. You can test the change by running `sudo sh -c 'echo 2 >/proc/sys/kernel/perf_event_paranoid'` which will persist until a reboot. Make it permanent by running `sudo sh -c 'echo kernel.perf_event_paranoid=1 >> /etc/sysctl.d/local.conf'`
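
Before changing the tunable, it can help to see what the current level is. The following is a minimal sketch: the helper names `perf_paranoid_level` and `perf_paranoid_at_most` are hypothetical, and the threshold is left as a parameter because the text above does not state which level `intel_gpu_top` requires on a given distribution.

```shell
#!/bin/sh
# Hypothetical helper: print the current perf_event_paranoid level.
# The optional path argument exists only so the helper can be pointed at a test file.
perf_paranoid_level() {
  cat "${1:-/proc/sys/kernel/perf_event_paranoid}"
}

# Hypothetical helper: succeed when the current level is at or below the threshold.
perf_paranoid_at_most() {
  threshold="$1"
  level="$(perf_paranoid_level "$2")" || return 1
  [ "$level" -le "$threshold" ]
}

# Example (not executed here):
#   perf_paranoid_at_most 2 && echo "level is 2 or lower"
```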

|

||||||

|

|

||||||

### AMD/ATI GPUs (Radeon HD 2000 and newer GPUs) via libva-mesa-driver

|

### AMD/ATI GPUs (Radeon HD 2000 and newer GPUs) via libva-mesa-driver

|

||||||

|

|

||||||

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams.

|

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams.

|

||||||

@ -59,15 +140,15 @@ ffmpeg:

|

|||||||

|

|

||||||

While older GPUs may work, it is recommended to use modern, supported GPUs. NVIDIA provides a [matrix of supported GPUs and features](https://developer.nvidia.com/video-encode-and-decode-gpu-support-matrix-new). If your card is on the list and supports CUVID/NVDEC, it will most likely work with Frigate for decoding. However, you must also use [a driver version that will work with FFmpeg](https://github.com/FFmpeg/nv-codec-headers/blob/master/README). Older driver versions may be missing symbols and fail to work, and older cards are not supported by newer driver versions. The only way around this is to [provide your own FFmpeg](/configuration/advanced#custom-ffmpeg-build) that will work with your driver version, but this is unsupported and may not work well if at all.

|

While older GPUs may work, it is recommended to use modern, supported GPUs. NVIDIA provides a [matrix of supported GPUs and features](https://developer.nvidia.com/video-encode-and-decode-gpu-support-matrix-new). If your card is on the list and supports CUVID/NVDEC, it will most likely work with Frigate for decoding. However, you must also use [a driver version that will work with FFmpeg](https://github.com/FFmpeg/nv-codec-headers/blob/master/README). Older driver versions may be missing symbols and fail to work, and older cards are not supported by newer driver versions. The only way around this is to [provide your own FFmpeg](/configuration/advanced#custom-ffmpeg-build) that will work with your driver version, but this is unsupported and may not work well if at all.

|

||||||

|

|

||||||

A more complete list of cards and ther compatible drivers is available in the [driver release readme](https://download.nvidia.com/XFree86/Linux-x86_64/525.85.05/README/supportedchips.html).

|

A more complete list of cards and their compatible drivers is available in the [driver release readme](https://download.nvidia.com/XFree86/Linux-x86_64/525.85.05/README/supportedchips.html).

|

||||||

|

|

||||||

If your distribution does not offer NVIDIA driver packages, you can [download them here](https://www.nvidia.com/en-us/drivers/unix/).

|

If your distribution does not offer NVIDIA driver packages, you can [download them here](https://www.nvidia.com/en-us/drivers/unix/).

|

||||||

|

|

||||||

#### Docker Configuration

|

#### Configuring Nvidia GPUs in Docker

|

||||||

|

|

||||||

Additional configuration is needed for the Docker container to be able to access the NVIDIA GPU. The supported method for this is to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#docker) and specify the GPU to Docker. How you do this depends on how Docker is being run:

|

Additional configuration is needed for the Docker container to be able to access the NVIDIA GPU. The supported method for this is to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#docker) and specify the GPU to Docker. How you do this depends on how Docker is being run:

|

||||||

|

|

||||||

##### Docker Compose

|

##### Docker Compose - Nvidia GPU

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

services:

|

services:

|

||||||

@ -84,7 +165,7 @@ services:

|

|||||||

capabilities: [gpu]

|

capabilities: [gpu]

|

||||||

```

|

```

|

||||||

|

|

||||||

##### Docker Run CLI

|

##### Docker Run CLI - Nvidia GPU

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

docker run -d \

|

docker run -d \

|

||||||

|

|||||||

@ -377,7 +377,7 @@ rtmp:

|

|||||||

enabled: False

|

enabled: False

|

||||||

|

|

||||||

# Optional: Restream configuration

|

# Optional: Restream configuration

|

||||||

# Uses https://github.com/AlexxIT/go2rtc (v1.2.0)

|

# Uses https://github.com/AlexxIT/go2rtc (v1.5.0)

|

||||||

go2rtc:

|

go2rtc:

|

||||||

|

|

||||||

# Optional: jsmpeg stream configuration for WebUI

|

# Optional: jsmpeg stream configuration for WebUI

|

||||||

|

|||||||

@ -78,6 +78,8 @@ WebRTC works by creating a TCP or UDP connection on port `8555`. However, it req

|

|||||||

- 192.168.1.10:8555

|

- 192.168.1.10:8555

|

||||||

- stun:8555

|

- stun:8555

|

||||||

```

|

```

|

||||||

|

|

||||||

|

- For access through Tailscale, the Frigate system's Tailscale IP must be added as a WebRTC candidate. Tailscale IPs all start with `100.` and are assigned from the `100.64.0.0/10` CIDR block.
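
To pick the right address for the candidate list, a quick check that an IP falls in Tailscale's range can be sketched as below. This assumes Tailscale assigns addresses from the shared `100.64.0.0/10` range (that is, `100.64.0.0` through `100.127.255.255`), which is narrower than every address starting with `100.`. The function name `is_tailscale_ip` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed when an IPv4 address lies in 100.64.0.0/10,
# the range Tailscale is assumed to assign addresses from.
is_tailscale_ip() {
  ip="$1"
  first="${ip%%.*}"       # first octet
  rest="${ip#*.}"
  second="${rest%%.*}"    # second octet
  [ "$first" = "100" ] && [ "$second" -ge 64 ] && [ "$second" -le 127 ]
}

# Example (not executed here): the Tailscale CLI prints the node's IPv4 address.
#   is_tailscale_ip "$(tailscale ip -4)" && echo "add this IP with port 8555 as a candidate"
```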

|

||||||

|

|

||||||

:::tip

|

:::tip

|

||||||

|

|

||||||

@ -97,8 +99,20 @@ However, it is recommended if issues occur to define the candidates manually. Yo

|

|||||||

If you are having difficulties getting WebRTC to work and you are running Frigate with docker, you may want to try changing the container network mode:

|

If you are having difficulties getting WebRTC to work and you are running Frigate with docker, you may want to try changing the container network mode:

|

||||||

|

|

||||||

- `network: host`, in this mode you don't need to forward any ports. The services inside of the Frigate container will have full access to the network interfaces of your host machine as if they were running natively and not in a container. Any port conflicts will need to be resolved. This network mode is recommended by go2rtc, but we recommend you only use it if necessary.

|

- `network: host`, in this mode you don't need to forward any ports. The services inside of the Frigate container will have full access to the network interfaces of your host machine as if they were running natively and not in a container. Any port conflicts will need to be resolved. This network mode is recommended by go2rtc, but we recommend you only use it if necessary.

|

||||||

- `network: bridge` creates a virtual network interface for the container, and the container will have full access to it. You also don't need to forward any ports, however, the IP for accessing Frigate locally will differ from the IP of the host machine. Your router will see Frigate as if it was a new device connected in the network.

|

- `network: bridge` is the default network driver. A bridge network is a link-layer device that forwards traffic between network segments. You need to forward any ports that you want to be accessible from the host IP.

|

||||||

|

|

||||||

|

If not running in host mode, port 8555 will need to be mapped for the container:

|

||||||

|

|

||||||

|

docker-compose.yml

|

||||||

|

```yaml

|

||||||

|

services:

|

||||||

|

frigate:

|

||||||

|

...

|

||||||

|

ports:

|

||||||

|

- "8555:8555/tcp" # WebRTC over tcp

|

||||||

|

- "8555:8555/udp" # WebRTC over udp

|

||||||

|

```

|

||||||

|

|

||||||

:::

|

:::

|

||||||

|

|

||||||

See [go2rtc WebRTC docs](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#module-webrtc) for more information about this.

|

See [go2rtc WebRTC docs](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#module-webrtc) for more information about this.

|

||||||

|

|||||||

@ -7,7 +7,7 @@ title: Restream

|

|||||||

|

|

||||||

Frigate can restream your video feed as an RTSP feed for other applications such as Home Assistant to utilize it at `rtsp://<frigate_host>:8554/<camera_name>`. Port 8554 must be open. [This allows you to use a video feed for detection in Frigate and Home Assistant live view at the same time without having to make two separate connections to the camera](#reduce-connections-to-camera). The video feed is copied from the original video feed directly to avoid re-encoding. This feed does not include any annotation by Frigate.

|

Frigate can restream your video feed as an RTSP feed for other applications such as Home Assistant to utilize it at `rtsp://<frigate_host>:8554/<camera_name>`. Port 8554 must be open. [This allows you to use a video feed for detection in Frigate and Home Assistant live view at the same time without having to make two separate connections to the camera](#reduce-connections-to-camera). The video feed is copied from the original video feed directly to avoid re-encoding. This feed does not include any annotation by Frigate.

|

||||||

|

|

||||||

Frigate uses [go2rtc](https://github.com/AlexxIT/go2rtc/tree/v1.2.0) to provide its restream and MSE/WebRTC capabilities. The go2rtc config is hosted at the `go2rtc` in the config, see [go2rtc docs](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#configuration) for more advanced configurations and features.

|

Frigate uses [go2rtc](https://github.com/AlexxIT/go2rtc/tree/v1.5.0) to provide its restream and MSE/WebRTC capabilities. The go2rtc config is hosted at the `go2rtc` in the config, see [go2rtc docs](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#configuration) for more advanced configurations and features.

|

||||||

|

|

||||||

:::note

|

:::note

|

||||||

|

|

||||||

@ -86,7 +86,7 @@ Two connections are made to the camera. One for the sub stream, one for the rest

|

|||||||

```yaml

|

```yaml

|

||||||

go2rtc:

|

go2rtc:

|

||||||

streams:

|

streams:

|

||||||

rtsp_cam:

|

rtsp_cam:

|

||||||

- rtsp://192.168.1.5:554/live0 # <- stream which supports video & aac audio. This is only supported for rtsp streams, http must use ffmpeg

|

- rtsp://192.168.1.5:554/live0 # <- stream which supports video & aac audio. This is only supported for rtsp streams, http must use ffmpeg

|

||||||

- "ffmpeg:rtsp_cam#audio=opus" # <- copy of the stream which transcodes audio to opus

|

- "ffmpeg:rtsp_cam#audio=opus" # <- copy of the stream which transcodes audio to opus

|

||||||

rtsp_cam_sub:

|

rtsp_cam_sub:

|

||||||

@ -130,7 +130,7 @@ cameras:

|

|||||||

|

|

||||||

## Advanced Restream Configurations

|

## Advanced Restream Configurations

|

||||||

|

|

||||||

The [exec](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#source-exec) source in go2rtc can be used for custom ffmpeg commands. An example is below:

|

The [exec](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#source-exec) source in go2rtc can be used for custom ffmpeg commands. An example is below:

|

||||||

|

|

||||||

NOTE: The output will need to be passed with two curly braces `{{output}}`

|

NOTE: The output will need to be passed with two curly braces `{{output}}`

|

||||||

|

|

||||||

|

|||||||

@ -4,3 +4,5 @@ title: Snapshots

|

|||||||

---

|

---

|

||||||

|

|

||||||

Frigate can save a snapshot image to `/media/frigate/clips` for each event named as `<camera>-<id>.jpg`.

|

Frigate can save a snapshot image to `/media/frigate/clips` for each event named as `<camera>-<id>.jpg`.

|

||||||

|

|

||||||

|

Snapshots sent via MQTT are configured in the [config file](https://docs.frigate.video/configuration/) under `cameras -> your_camera -> mqtt`

|

||||||

|

|||||||

@ -36,7 +36,13 @@ Fork [blakeblackshear/frigate-hass-integration](https://github.com/blakeblackshe

|

|||||||

- [Frigate source code](#frigate-core-web-and-docs)

|

- [Frigate source code](#frigate-core-web-and-docs)

|

||||||

- GNU make

|

- GNU make

|

||||||

- Docker

|

- Docker

|

||||||

- Extra Coral device (optional, but very helpful to simulate real world performance)

|

- An extra detector (Coral, OpenVINO, etc.) is optional but recommended to simulate real world performance.

|

||||||

|

|

||||||

|

:::note

|

||||||

|

|

||||||

|

A Coral device can only be used by a single process at a time, so an extra Coral device is recommended if you use a Coral for development purposes.

|

||||||

|

|

||||||

|

:::

|

||||||

|

|

||||||

### Setup

|

### Setup

|

||||||

|

|

||||||

@ -79,7 +85,7 @@ Create and place these files in a `debug` folder in the root of the repo. This i

|

|||||||

VSCode will start the docker compose file for you and open a terminal window connected to `frigate-dev`.

|

VSCode will start the docker compose file for you and open a terminal window connected to `frigate-dev`.

|

||||||

|

|

||||||

- Run `python3 -m frigate` to start the backend.

|

- Run `python3 -m frigate` to start the backend.

|

||||||

- In a separate terminal window inside VS Code, change into the `web` directory and run `npm install && npm start` to start the frontend.

|

- In a separate terminal window inside VS Code, change into the `web` directory and run `npm install && npm run dev` to start the frontend.

|

||||||

|

|

||||||

#### 5. Teardown

|

#### 5. Teardown

|

||||||

|

|

||||||

|

|||||||

@ -211,3 +211,109 @@ It is recommended to run Frigate in LXC for maximum performance. See [this discu

|

|||||||

## ESX

|

## ESX

|

||||||

|

|

||||||

For details on running Frigate under ESX, see details [here](https://github.com/blakeblackshear/frigate/issues/305).

|

For details on running Frigate under ESX, see details [here](https://github.com/blakeblackshear/frigate/issues/305).

|

||||||

|

|

||||||

|

## Synology NAS on DSM 7

|

||||||

|

|

||||||

|

These settings were tested on DSM 7.1.1-42962 Update 4

|

||||||

|

|

||||||

|

|

||||||

|

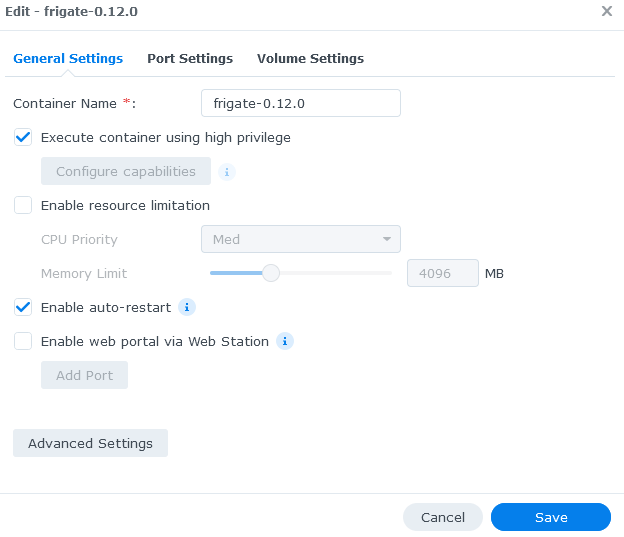

**General:**

|

||||||

|

|

||||||

|

The `Execute container using high privilege` option needs to be enabled in order to give the Frigate container the elevated privileges it may need.

|

||||||

|

|

||||||

|

The `Enable auto-restart` option can be enabled if you want the container to automatically restart whenever it improperly shuts down due to an error.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

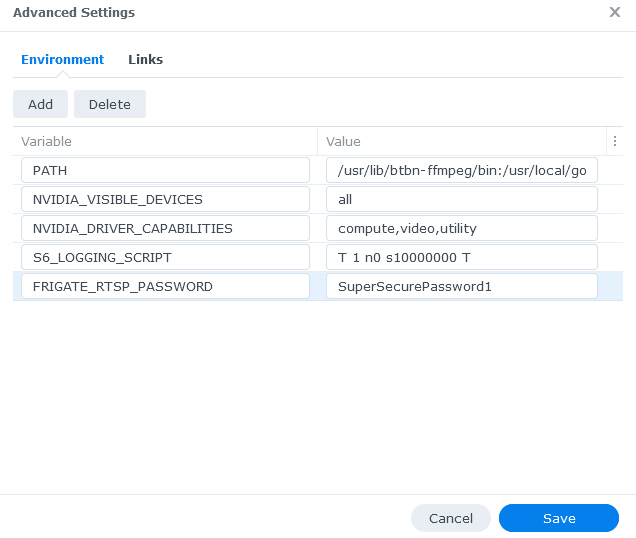

**Advanced Settings:**

|

||||||

|

|

||||||

|

If you want to use the password template feature, you should add the "FRIGATE_RTSP_PASSWORD" environment variable and set it to your preferred password under advanced settings. The rest of the environment variables should be left as default for now.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

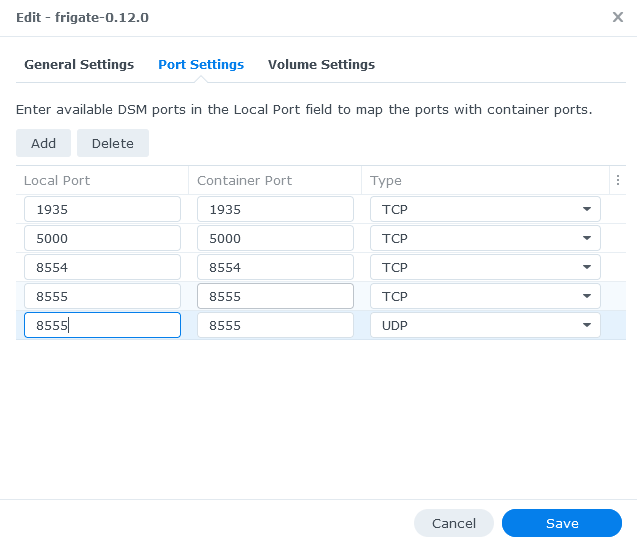

**Port Settings:**

|

||||||

|

|

||||||

|

The network mode should be set to `bridge`. You need to map the default frigate container ports to your local Synology NAS ports that you want to use to access Frigate.

|

||||||

|

|

||||||

|

There may be other services running on your NAS that are using the same ports that frigate uses. In that instance you can set the ports to auto or a specific port.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

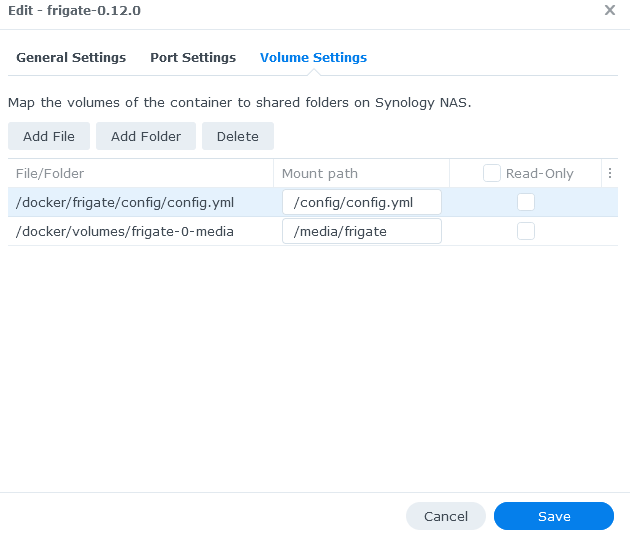

**Volume Settings:**

|

||||||

|

|

||||||

|

You need to configure 2 paths:

|

||||||

|

|

||||||

|

- The location of your config file in YAML format. This needs to be a file, so browse to where your `config.yml` is located. The path depends on your NAS folder structure, e.g. `/docker/frigate/config/config.yml` will mount to `/config/config.yml` within the container.

|

||||||

|

- The location on your NAS where the recordings will be saved. This needs to be a folder, e.g. `/docker/volumes/frigate-0-media`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## QNAP NAS

|

||||||

|

|

||||||

|

These instructions were tested on a QNAP with an Intel J3455 CPU and 16G RAM, running QTS 4.5.4.2117.

|

||||||

|

|

||||||

|

QNAP has a graphical tool named Container Station to install and manage docker containers. However, there are two limitations with Container Station that make it unsuitable for installing Frigate:

|

||||||

|

|

||||||

|

1. Container Station does not incorporate GitHub Container Registry (ghcr), which hosts Frigate docker image version 0.12.0 and above.

|

||||||

|

2. Container Station uses a default shared memory size (shm-size) of 64 MB, and does not have a mechanism to adjust it. Frigate requires a larger shm-size to be able to work properly with more than two high resolution cameras.

|

||||||

|

|

||||||

|

Because of the above limitations, the installation has to be done from the command line. Here are the steps:

|

||||||

|

|

||||||

|

**Preparation**

|

||||||

|

1. Install Container Station from QNAP App Center if it is not installed.

|

||||||

|

2. Enable ssh on your QNAP (please do an Internet search on how to do this).

|

||||||

|

3. Prepare Frigate config file, name it `config.yml`.

|

||||||

|

4. Calculate shared memory size according to [documentation](https://docs.frigate.video/frigate/installation).

|

||||||

|

5. Find your time zone value from https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

|

||||||

|

6. ssh to QNAP.

|

||||||

|

|

||||||

|

**Installation**

|

||||||

|

|

||||||

|

Run the following commands to install Frigate (using the `stable` version as an example):

|

||||||

|

```bash

|

||||||

|

# Download Frigate image

|

||||||

|

docker pull ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

# Create directory to host Frigate config file on QNAP file system.

|

||||||

|

# E.g., you can choose to create it under /share/Container.

|

||||||

|

mkdir -p /share/Container/frigate/config

|

||||||

|

# Copy the config file prepared in preparation step 3 into the newly created config directory.

|

||||||

|

cp path/to/your/config/file /share/Container/frigate/config

|

||||||

|

# Create directory to host Frigate media files on QNAP file system.

|

||||||

|

# (if you have a surveillance disk, create the media directory on the surveillance disk).

|

||||||

|

# Example command assumes share_vol2 is the surveillance drive

|

||||||

|

mkdir -p /share/share_vol2/frigate/media

|

||||||

|

# Create Frigate docker container. Replace shm-size value with the value from preparation step 4.

|

||||||

|

# Also replace the time zone value for 'TZ' in the sample command.

|

||||||

|

# Example command will create a docker container that uses at most 2 CPUs and 4G RAM.

|

||||||

|

# You may need to add "--env=LIBVA_DRIVER_NAME=i965 \" to the following docker run command if you

|

||||||

|

# have certain CPUs (e.g., J4125). See https://docs.frigate.video/configuration/hardware_acceleration.

|

||||||

|

docker run \

|

||||||

|

--name=frigate \

|

||||||

|

--shm-size=256m \

|

||||||

|

--restart=unless-stopped \

|

||||||

|

--env=TZ=America/New_York \

|

||||||

|

--volume=/share/Container/frigate/config:/config:rw \

|

||||||

|

--volume=/share/share_vol2/frigate/media:/media/frigate:rw \

|

||||||

|

--network=bridge \

|

||||||

|

--privileged \

|

||||||

|

--workdir=/opt/frigate \

|

||||||

|

-p 1935:1935 \

|

||||||

|

-p 5000:5000 \

|

||||||

|

-p 8554:8554 \

|

||||||

|

-p 8555:8555 \

|

||||||

|

-p 8555:8555/udp \

|

||||||

|

--label='com.qnap.qcs.network.mode=nat' \

|

||||||

|

--label='com.qnap.qcs.gpu=False' \

|

||||||

|

--memory="4g" \

|

||||||

|

--cpus="2" \

|

||||||

|

--detach=true \

|

||||||

|

-t \

|

||||||

|

ghcr.io/blakeblackshear/frigate:stable

|

||||||

|

```

|

||||||

|

|

||||||

|

Log into QNAP and open Container Station. The Frigate docker container should be listed under 'Overview' and running. Visit the Frigate Web UI by clicking the Frigate container, and then clicking the URL shown at the top of the detail page.

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@ -10,7 +10,7 @@ Use of the bundled go2rtc is optional. You can still configure FFmpeg to connect

|

|||||||

|

|

||||||

# Setup a go2rtc stream

|

# Setup a go2rtc stream

|

||||||

|

|

||||||

First, you will want to configure go2rtc to connect to your camera stream by adding the stream you want to use for live view in your Frigate config file. If you set the stream name under go2rtc to match the name of your camera, it will automatically be mapped and you will get additional live view options for the camera. Avoid changing any other parts of your config at this step. Note that go2rtc supports [many different stream types](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#module-streams), not just rtsp.

|

First, you will want to configure go2rtc to connect to your camera stream by adding the stream you want to use for live view in your Frigate config file. If you set the stream name under go2rtc to match the name of your camera, it will automatically be mapped and you will get additional live view options for the camera. Avoid changing any other parts of your config at this step. Note that go2rtc supports [many different stream types](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#module-streams), not just rtsp.

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

go2rtc:

|

go2rtc:

|

||||||

@ -23,7 +23,7 @@ The easiest live view to get working is MSE. After adding this to the config, re

|

|||||||

|

|

||||||

### What if my video doesn't play?

|

### What if my video doesn't play?

|

||||||

|

|

||||||

If you are unable to see your video feed, first check the go2rtc logs in the Frigate UI under Logs in the sidebar. If go2rtc is having difficulty connecting to your camera, you should see some error messages in the log. If you do not see any errors, then the video codec of the stream may not be supported in your browser. If your camera stream is set to H265, try switching to H264. You can see more information about [video codec compatibility](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#codecs-madness) in the go2rtc documentation. If you are not able to switch your camera settings from H265 to H264 or your stream is a different format such as MJPEG, you can use go2rtc to re-encode the video using the [FFmpeg parameters](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#source-ffmpeg). It supports rotating and resizing video feeds and hardware acceleration. Keep in mind that transcoding video from one format to another is a resource intensive task and you may be better off using the built-in jsmpeg view. Here is an example of a config that will re-encode the stream to H264 without hardware acceleration:

|

If you are unable to see your video feed, first check the go2rtc logs in the Frigate UI under Logs in the sidebar. If go2rtc is having difficulty connecting to your camera, you should see some error messages in the log. If you do not see any errors, then the video codec of the stream may not be supported in your browser. If your camera stream is set to H265, try switching to H264. You can see more information about [video codec compatibility](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#codecs-madness) in the go2rtc documentation. If you are not able to switch your camera settings from H265 to H264 or your stream is a different format such as MJPEG, you can use go2rtc to re-encode the video using the [FFmpeg parameters](https://github.com/AlexxIT/go2rtc/tree/v1.5.0#source-ffmpeg). It supports rotating and resizing video feeds and hardware acceleration. Keep in mind that transcoding video from one format to another is a resource intensive task and you may be better off using the built-in jsmpeg view. Here is an example of a config that will re-encode the stream to H264 without hardware acceleration:

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

go2rtc:

|

go2rtc:

|

||||||

@ -71,6 +71,12 @@ go2rtc:

|

|||||||

- "ffmpeg:rtsp://user:password@10.0.10.10:554/cam/realmonitor?channel=1&subtype=2#video=copy#audio=copy#audio=aac"

|

- "ffmpeg:rtsp://user:password@10.0.10.10:554/cam/realmonitor?channel=1&subtype=2#video=copy#audio=copy#audio=aac"

|

||||||

```

|

```

|

||||||

|

|

||||||

|

:::caution

|

||||||

|

|

||||||

|

To access the go2rtc stream externally when utilizing the Frigate Add-On (for instance through VLC), you must first enable the RTSP Restream port. You can do this by visiting the Frigate Add-On configuration page within Home Assistant and revealing the hidden options under the "Show disabled ports" section.

|

||||||

|

|

||||||

|

:::

|

||||||

|

|

||||||

## Next steps

|

## Next steps

|

||||||

|

|

||||||

1. If the stream you added to go2rtc is also used by Frigate for the `record` or `detect` role, you can migrate your config to pull from the RTSP restream to reduce the number of connections to your camera as shown [here](/configuration/restream#reduce-connections-to-camera).

|

1. If the stream you added to go2rtc is also used by Frigate for the `record` or `detect` role, you can migrate your config to pull from the RTSP restream to reduce the number of connections to your camera as shown [here](/configuration/restream#reduce-connections-to-camera).

|

||||||

|

|||||||

@ -14,7 +14,7 @@ mqtt:

|

|||||||

enabled: False

|

enabled: False

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1: # <------ Name the camera

|

name_of_your_camera: # <------ Name the camera

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

inputs:

|

inputs:

|

||||||

- path: rtsp://10.0.10.10:554/rtsp # <----- The stream you want to use for detection

|

- path: rtsp://10.0.10.10:554/rtsp # <----- The stream you want to use for detection

|

||||||

@ -44,7 +44,7 @@ Here is an example configuration with hardware acceleration configured for Intel

|

|||||||

mqtt: ...

|

mqtt: ...

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1:

|

name_of_your_camera:

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

inputs: ...

|

inputs: ...

|

||||||

hwaccel_args: preset-vaapi

|

hwaccel_args: preset-vaapi

|

||||||

@ -64,7 +64,7 @@ detectors: # <---- add detectors

|

|||||||

device: usb

|

device: usb

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1:

|

name_of_your_camera:

|

||||||

ffmpeg: ...

|

ffmpeg: ...

|

||||||

detect:

|

detect:

|

||||||

enabled: True # <---- turn on detection

|

enabled: True # <---- turn on detection

|

||||||

@ -99,7 +99,7 @@ detectors:

|

|||||||

device: usb

|

device: usb

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1:

|

name_of_your_camera:

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

inputs:

|

inputs:

|

||||||

- path: rtsp://10.0.10.10:554/rtsp

|

- path: rtsp://10.0.10.10:554/rtsp

|

||||||

@ -127,7 +127,7 @@ mqtt: ...

|

|||||||

detectors: ...

|

detectors: ...

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1:

|

name_of_your_camera:

|

||||||

ffmpeg:

|

ffmpeg:

|

||||||

inputs:

|

inputs:

|

||||||

- path: rtsp://10.0.10.10:554/rtsp

|

- path: rtsp://10.0.10.10:554/rtsp

|

||||||

@ -156,7 +156,7 @@ mqtt: ...

|

|||||||

detectors: ...

|

detectors: ...

|

||||||

|

|

||||||

cameras:

|

cameras:

|

||||||

camera_1: ...

|

name_of_your_camera: ...

|

||||||

detect: ...

|

detect: ...

|

||||||

record: ...

|

record: ...

|

||||||

snapshots: # <----- Enable snapshots

|

snapshots: # <----- Enable snapshots

|

||||||

|

|||||||

@ -3,7 +3,7 @@ id: ha_notifications

|

|||||||

title: Home Assistant notifications

|

title: Home Assistant notifications

|

||||||

---

|

---

|

||||||

|

|

||||||

The best way to get started with notifications for Frigate is to use the [Blueprint](https://community.home-assistant.io/t/frigate-mobile-app-notifications/311091). You can use the yaml generated from the Blueprint as a starting point and customize from there.

|

The best way to get started with notifications for Frigate is to use the [Blueprint](https://community.home-assistant.io/t/frigate-mobile-app-notifications-2-0/559732). You can use the yaml generated from the Blueprint as a starting point and customize from there.

|

||||||

|

|

||||||

It is generally recommended to trigger notifications based on the `frigate/events` mqtt topic. This provides the event_id needed to fetch [thumbnails/snapshots/clips](../integrations/home-assistant.md#notification-api) and other useful information to customize when and where you want to receive alerts. The data is published in the form of a change feed, which means you can reference the "previous state" of the object in the `before` section and the "current state" of the object in the `after` section. You can see an example [here](../integrations/mqtt.md#frigateevents).

|

It is generally recommended to trigger notifications based on the `frigate/events` mqtt topic. This provides the event_id needed to fetch [thumbnails/snapshots/clips](../integrations/home-assistant.md#notification-api) and other useful information to customize when and where you want to receive alerts. The data is published in the form of a change feed, which means you can reference the "previous state" of the object in the `before` section and the "current state" of the object in the `after` section. You can see an example [here](../integrations/mqtt.md#frigateevents).
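To make the change-feed idea concrete, here is a minimal Python sketch that compares the `before` and `after` sections of a `frigate/events` message and reports which fields changed. The function name `summarize_event` and the sample payload are illustrative assumptions; only the `before`/`after` structure and the `id` and `camera` fields come from the text and example above.

```python
import json


def summarize_event(raw_payload: str) -> dict:
    """Summarize one frigate/events message (hypothetical helper)."""
    payload = json.loads(raw_payload)
    # "before" is the previous state of the object, "after" the current one.
    before = payload.get("before") or {}
    after = payload.get("after") or {}
    # List every key whose value differs between the two states.
    changed = sorted(key for key in after if before.get(key) != after[key])
    return {
        "event_id": after.get("id"),
        "camera": after.get("camera"),
        "changed": changed,
    }


# Made-up sample message: the object has just entered a zone.
sample = json.dumps(
    {
        "before": {"id": "abc", "camera": "front", "entered_zones": []},
        "after": {"id": "abc", "camera": "front", "entered_zones": ["driveway"]},
    }
)
summary = summarize_event(sample)
```

An automation could use the `changed` list to react only when a field of interest differs between the two states.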

|

||||||

|

|

||||||

@ -45,7 +45,7 @@ automation:

|

|||||||

https://your.public.hass.address.com/api/frigate/notifications/{{trigger.payload_json["after"]["id"]}}/thumbnail.jpg

|

https://your.public.hass.address.com/api/frigate/notifications/{{trigger.payload_json["after"]["id"]}}/thumbnail.jpg

|

||||||

tag: '{{trigger.payload_json["after"]["id"]}}'

|

tag: '{{trigger.payload_json["after"]["id"]}}'

|

||||||

when: '{{trigger.payload_json["after"]["start_time"]|int}}'

|

when: '{{trigger.payload_json["after"]["start_time"]|int}}'

|

||||||

entity_id: camera.{{trigger.payload_json["after"]["camera"]}}

|

entity_id: camera.{{trigger.payload_json["after"]["camera"] | replace("-","_") | lower}}

|

||||||

mode: single

|

mode: single

|

||||||

```

|

```
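The updated `entity_id` template replaces dashes with underscores and lowercases the camera name. A rough Python equivalent of that transformation, assuming the entity follows the `camera.<name>` pattern shown in the template (the function name is hypothetical):

```python
def camera_entity_id(camera_name: str) -> str:
    """Mirror the template filters: replace("-", "_") followed by lower."""
    return "camera." + camera_name.replace("-", "_").lower()


entity = camera_entity_id("Front-Door")
```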

|

||||||

|

|

||||||

|

|||||||

@ -84,3 +84,61 @@ There are many ways to authenticate a website but a straightforward approach is

|

|||||||

</Location>

|

</Location>

|

||||||

</VirtualHost>

|

</VirtualHost>

|

||||||

```

|

```

|

||||||

|

|

||||||

|

## Nginx Reverse Proxy

|

||||||

|

|

||||||

|

This method shows a working example for subdomain type reverse proxy with SSL enabled.

|

||||||

|

|

||||||

|

### Setup server and port to reverse proxy

|

||||||

|

|

||||||

|

Set the `$server` and `$port` variables to match the host and port you have exposed for your docker container. Optionally, listen on port `443` and enable SSL.

|

||||||

|

|

||||||

|

```

|

||||||

|

# ------------------------------------------------------------

|

||||||

|

# frigate.domain.com

|

||||||

|

# ------------------------------------------------------------

|

||||||

|

|

||||||

|

server {

|

||||||

|

set $forward_scheme http;

|

||||||

|

set $server "192.168.100.2"; # FRIGATE SERVER LOCATION

|

||||||

|

set $port 5000;

|

||||||

|

|

||||||

|

listen 80;

|

||||||

|

listen 443 ssl http2;

|

||||||

|

|

||||||

|

server_name frigate.domain.com;

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

### Setup SSL (optional)

|

||||||

|

|

||||||

|

This section points to your SSL files. The example below shows the locations of a default Let's Encrypt SSL certificate.

|

||||||

|

|

||||||

|

```

|

||||||

|

# Let's Encrypt SSL

|

||||||

|

include conf.d/include/letsencrypt-acme-challenge.conf;

|

||||||

|

include conf.d/include/ssl-ciphers.conf;

|

||||||

|

ssl_certificate /etc/letsencrypt/live/npm-1/fullchain.pem;

|

||||||

|

ssl_certificate_key /etc/letsencrypt/live/npm-1/privkey.pem;

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

### Setup reverse proxy settings

|

||||||

|

|

||||||

|

The settings below enable connection upgrades, set up logging (optional), and proxy everything from the `/` context to the docker host and port specified earlier in the configuration.

|

||||||

|

|

||||||

|

```

|

||||||

|

proxy_set_header Upgrade $http_upgrade;

|

||||||

|

proxy_set_header Connection $http_connection;

|

||||||

|

proxy_http_version 1.1;

|

||||||

|

|

||||||

|

access_log /data/logs/proxy-host-40_access.log proxy;

|

||||||

|

error_log /data/logs/proxy-host-40_error.log warn;

|

||||||

|

|

||||||

|

location / {

|

||||||

|

proxy_set_header Upgrade $http_upgrade;

|

||||||

|

proxy_set_header Connection $http_connection;

|

||||||

|

proxy_http_version 1.1;

|

||||||

|

}

|

||||||

|

|

||||||

|

```

|

||||||

|

|||||||

@ -3,7 +3,7 @@ id: stationary_objects

|

|||||||

title: Avoiding stationary objects

|

title: Avoiding stationary objects

|

||||||

---

|

---

|

||||||

|

|

||||||

Many people use Frigate to detect cars entering their driveway, and they often run into an issue with repeated events of a parked car being repeatedly detected over the course of multiple days (for example if the car is lost at night and detected again the following morning.

|

Many people use Frigate to detect cars entering their driveway, and they often run into an issue with repeated notifications or events of a parked car being repeatedly detected over the course of multiple days (for example if the car is lost at night and detected again the following morning).

|

||||||

|

|

||||||

You can use zones to restrict events and notifications to objects that have entered specific areas.

|

You can use zones to restrict events and notifications to objects that have entered specific areas.

|

||||||

|

|

||||||

@ -15,6 +15,12 @@ Frigate is designed to track objects as they move and over-masking can prevent i

|

|||||||

|

|

||||||

:::

|

:::

|

||||||

|

|

||||||

|

:::info

|

||||||

|

|

||||||

|

Once a vehicle crosses the entrance into the parking area, that event will stay `In Progress` until it is no longer seen in the frame. Frigate is designed to have an event last as long as an object is visible in the frame, so an event being `In Progress` does not mean the event is being constantly recorded. You can define the recording behavior by adjusting the [recording retention settings](../configuration/record.md).

|

||||||

|

|

||||||

|

:::

|

||||||

|

|

||||||

To only be notified of cars that enter your driveway from the street, you could create multiple zones that cover your driveway. For cars, you would only notify if `entered_zones` from the events MQTT topic has more than 1 zone.

|

To only be notified of cars that enter your driveway from the street, you could create multiple zones that cover your driveway. For cars, you would only notify if `entered_zones` from the events MQTT topic has more than 1 zone.
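As a minimal sketch of that rule in Python, the check below returns true only for a car whose `entered_zones` list holds more than one zone. The function name, the `label` field lookup, and the zone names in the usage lines are assumptions for illustration; `entered_zones` and the `after` section come from the events MQTT topic described in the docs.

```python
def should_notify(payload: dict, min_zones: int = 2) -> bool:
    """Return True for a car that has entered at least `min_zones` zones."""
    after = payload.get("after") or {}
    # Assumption: the object type is reported under "label".
    if after.get("label") != "car":
        return False
    # "More than 1 zone" means at least two entries in entered_zones.
    return len(after.get("entered_zones", [])) >= min_zones


# Hypothetical zone names: a car coming from the street crosses both zones,
# while a parked car that is re-detected only ever appears in one.
arriving = {"after": {"label": "car", "entered_zones": ["street_side", "garage_side"]}}
parked = {"after": {"label": "car", "entered_zones": ["garage_side"]}}
```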

|

||||||

|

|

||||||

See [this example](../configuration/zones.md#restricting-zones-to-specific-objects) from the Zones documentation to see how to restrict zones to certain object types.

|

See [this example](../configuration/zones.md#restricting-zones-to-specific-objects) from the Zones documentation to see how to restrict zones to certain object types.

|

||||||

|

|||||||

@ -295,3 +295,41 @@ Get ffprobe output for camera feed paths.

|

|||||||

### `GET /api/<camera_name>/ptz/info`

|

### `GET /api/<camera_name>/ptz/info`

|

||||||

|

|

||||||

Get PTZ info for the camera.

|

Get PTZ info for the camera.

|

||||||

|

|

||||||

|

### `POST /api/events/<camera_name>/<label>/create`

|

||||||

|

|

||||||

|

Create a manual event with a given `label` (ex: doorbell press) to capture a specific event besides an object being detected.

|

||||||

|

|

||||||

|

**Optional Body:**

|

||||||

|

|

||||||

|

```json

|

||||||

|

{

|

||||||

|

"subLabel": "some_string", // add sub label to event

|

||||||

|

"duration": 30, // predetermined length of event (default: 30 seconds) or can be to null for indeterminate length event

|

||||||

|

"include_recording": true, // whether the event should save recordings along with the snapshot that is taken

|

||||||

|

"draw": {

|

||||||

|

// optional annotations that will be drawn on the snapshot

|

||||||

|

"boxes": [

|

||||||

|

{

|

||||||

|

"box": [0.5, 0.5, 0.25, 0.25], // box consists of x, y, width, height which are on a scale between 0 - 1

|

||||||

|

"color": [255, 0, 0], // color of the box, default is red

|

||||||

|

"score": 100 // optional score associated with the box

|

||||||

|

}

|

||||||

|

]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

**Success Response:**

|

||||||

|

|

||||||

|

```json

|

||||||

|

{

|

||||||

|

"event_id": "1682970645.13116-1ug7ns",

|

||||||

|

"message": "Successfully created event.",

|

||||||

|

"success": true

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

### `PUT /api/events/<event_id>/end`

|

||||||

|

|

||||||

|

End a specific manual event without a predetermined length.
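The two endpoints above can be exercised with a short Python sketch that only builds the HTTP requests using the standard library. The base URL `http://frigate.local:5000` and the helper names are assumptions, not part of Frigate; the paths, HTTP methods, and body fields are taken from the endpoint descriptions above.

```python
import json
import urllib.parse
import urllib.request

FRIGATE_URL = "http://frigate.local:5000"  # hypothetical host, adjust to your setup


def build_create_event_request(camera, label, body=None, base_url=FRIGATE_URL):
    """Build the POST request for /api/events/<camera_name>/<label>/create."""
    path = "/api/events/{}/{}/create".format(
        urllib.parse.quote(camera, safe=""),
        urllib.parse.quote(label, safe=""),
    )
    data = json.dumps(body or {}).encode("utf-8")
    return urllib.request.Request(
        base_url + path,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_end_event_request(event_id, base_url=FRIGATE_URL):
    """Build the PUT request for /api/events/<event_id>/end."""
    path = "/api/events/{}/end".format(urllib.parse.quote(event_id, safe=""))
    return urllib.request.Request(base_url + path, method="PUT")


# A null duration creates an event of indeterminate length, ended later by id.
create_req = build_create_event_request(
    "front_door", "doorbell press", {"duration": None, "include_recording": True}
)
end_req = build_end_event_request("1682970645.13116-1ug7ns")
```

Passing either request to `urllib.request.urlopen` would send it. The `event_id` needed for the end request is returned in the success response of the create call.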

|

||||||

|

|||||||

19

docs/docs/integrations/third_party_extensions.md

Normal file

@ -0,0 +1,19 @@

|

|||||||

|

---

|

||||||

|

id: third_party_extensions

|

||||||

|

title: Third Party Extensions

|

||||||

|

---

|

||||||

|

|

||||||

|

Because Frigate is open source, others can modify and extend the rich functionality it already offers.

|

||||||

|

This page is meant to be an overview of additions one can make to the home NVR setup. The list is not exhaustive and can be extended via PR to the Frigate docs.

|

||||||

|

|

||||||

|

:::caution

|

||||||

|

|

||||||

|

This page does not recommend or rate the presented projects.

|

||||||

|

Please use your own knowledge to assess and vet them before you install anything on your system.

|

||||||

|

|

||||||

|

:::

|

||||||

|

|

||||||

|

## [Double Take](https://github.com/jakowenko/double-take)

|

||||||

|

|

||||||

|

[Double Take](https://github.com/jakowenko/double-take) provides a unified UI and API for processing and training images for facial recognition.

|

||||||

|

It supports automatically setting the sub labels in Frigate for person objects that are detected and recognized.

|

||||||

@ -13,7 +13,7 @@ module.exports = {

|

|||||||

themeConfig: {

|

themeConfig: {

|

||||||

algolia: {

|

algolia: {

|

||||||

appId: 'WIURGBNBPY',

|

appId: 'WIURGBNBPY',

|

||||||

apiKey: '81ec882db78f7fed05c51daf973f0362',

|

apiKey: 'd02cc0a6a61178b25da550212925226b',

|

||||||

indexName: 'frigate',

|

indexName: 'frigate',

|

||||||

},

|

},

|

||||||

docs: {

|

docs: {

|

||||||

|

|||||||

1111

docs/package-lock.json

generated

File diff suppressed because it is too large

Load Diff

@ -14,8 +14,8 @@

|

|||||||

"write-heading-ids": "docusaurus write-heading-ids"

|

"write-heading-ids": "docusaurus write-heading-ids"

|

||||||

},

|

},

|

||||||

"dependencies": {

|

"dependencies": {

|

||||||

"@docusaurus/core": "^2.4.0",

|

"@docusaurus/core": "^2.4.1",

|

||||||

"@docusaurus/preset-classic": "^2.4.0",

|

"@docusaurus/preset-classic": "^2.4.1",

|

||||||

"@mdx-js/react": "^1.6.22",

|

"@mdx-js/react": "^1.6.22",

|

||||||

"clsx": "^1.2.1",

|

"clsx": "^1.2.1",

|

||||||

"prism-react-renderer": "^1.3.5",

|

"prism-react-renderer": "^1.3.5",

|

||||||

|

|||||||

@ -37,6 +37,7 @@ module.exports = {

|

|||||||

"integrations/home-assistant",

|

"integrations/home-assistant",

|

||||||

"integrations/api",

|

"integrations/api",

|

||||||

"integrations/mqtt",

|

"integrations/mqtt",

|

||||||

|

"integrations/third_party_extensions",

|

||||||

],

|

],

|

||||||

Troubleshooting: [

|

Troubleshooting: [

|

||||||

"troubleshooting/faqs",

|

"troubleshooting/faqs",

|

||||||

|

|||||||

@ -1,13 +1,14 @@

|

|||||||

import faulthandler

|

import faulthandler

|

||||||

from flask import cli

|

|

||||||

|

|

||||||

faulthandler.enable()

|

|

||||||

import threading

|

import threading

|

||||||

|

|

||||||

threading.current_thread().name = "frigate"

|

from flask import cli

|

||||||

|

|

||||||

from frigate.app import FrigateApp

|

from frigate.app import FrigateApp

|

||||||

|

|

||||||

|

faulthandler.enable()

|

||||||

|

|

||||||

|

threading.current_thread().name = "frigate"

|

||||||

|

|

||||||

cli.show_server_banner = lambda *x: None

|

cli.show_server_banner = lambda *x: None

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

|

|||||||

@ -1,16 +1,16 @@

|

|||||||

import logging

|

import logging

|

||||||

import multiprocessing as mp

|

import multiprocessing as mp

|

||||||

from multiprocessing.queues import Queue

|

|

||||||

from multiprocessing.synchronize import Event as MpEvent

|

|

||||||

import os

|

import os

|

||||||

import shutil

|

import shutil

|

||||||

import signal

|

import signal

|

||||||

import sys

|

import sys

|

||||||

from typing import Optional

|

|

||||||

from types import FrameType

|

|

||||||

import psutil

|

|

||||||

|

|

||||||

import traceback

|

import traceback

|

||||||

|

from multiprocessing.queues import Queue

|

||||||

|

from multiprocessing.synchronize import Event as MpEvent

|

||||||

|

from types import FrameType

|

||||||

|

from typing import Optional

|

||||||

|

|

||||||

|

import psutil

|

||||||

from peewee_migrate import Router

|

from peewee_migrate import Router

|

||||||

from playhouse.sqlite_ext import SqliteExtDatabase

|

from playhouse.sqlite_ext import SqliteExtDatabase

|

||||||

from playhouse.sqliteq import SqliteQueueDatabase

|

from playhouse.sqliteq import SqliteQueueDatabase

|

||||||

@ -27,11 +27,13 @@ from frigate.const import (

|

|||||||

MODEL_CACHE_DIR,

|

MODEL_CACHE_DIR,

|

||||||

RECORD_DIR,

|

RECORD_DIR,

|

||||||

)

|

)

|

||||||

from frigate.object_detection import ObjectDetectProcess

|

from frigate.events.cleanup import EventCleanup

|

||||||

from frigate.events import EventCleanup, EventProcessor

|

from frigate.events.external import ExternalEventProcessor

|

||||||

|

from frigate.events.maintainer import EventProcessor

|

||||||

from frigate.http import create_app

|

from frigate.http import create_app

|

||||||

from frigate.log import log_process, root_configurer

|

from frigate.log import log_process, root_configurer

|

||||||

from frigate.models import Event, Recordings, Timeline

|

from frigate.models import Event, Recordings, Timeline

|

||||||

|

from frigate.object_detection import ObjectDetectProcess

|

||||||

from frigate.object_processing import TrackedObjectProcessor

|